Table of Contents

This tutorial provides a guidance on how to exchange data between GAMS and various Database Management System.

Data Exchange with DB2

DB2 is one of IBM's relational database management systems.

Import from DB2

DB2 has an EXPORT command that can be used to generate comma delimited files. An example of a DB2 session illustrating this is shown below:

------------------------------------- Command Entered ------------------------------------- describe table db2admin.dist ; ------------------------------------------------------------------------------------------- Column Type Type name schema name Length Scale Nulls ------------------------------ --------- ------------------ -------- ----- ----- LOCA SYSIBM VARCHAR 10 0 No LOCB SYSIBM VARCHAR 10 0 No DISTANCE SYSIBM DOUBLE 8 0 Yes 3 record(s) selected. ------------------------------------- Command Entered ------------------------------------- select * from dist ; ------------------------------------------------------------------------------------------- LOCA LOCB DISTANCE ---------- ---------- ------------------------ seattle new-york +2.50000000000000E+000 seattle chicago +1.70000000000000E+000 seattle topeka +1.80000000000000E+000 san-diego new-york +2.50000000000000E+000 san-diego chicago +1.80000000000000E+000 san-diego topeka +1.40000000000000E+000 6 record(s) selected. ------------------------------------- Command Entered ------------------------------------- export to c:\tmp\export.txt of del select * from dist ; ------------------------------------------------------------------------------------------- SQL3104N The Export utility is beginning to export data to file "c:\tmp\export.txt". SQL3105N The Export utility has finished exporting "6" rows. Number of rows exported: 6

The resulting data file export.txt will look like:

"seattle","new-york",+2.50000000000000E+000 "seattle","chicago",+1.70000000000000E+000 "seattle","topeka",+1.80000000000000E+000 "san-diego","new-york",+2.50000000000000E+000 "san-diego","chicago",+1.80000000000000E+000 "san-diego","topeka",+1.40000000000000E+000

This file can be read into GAMS using $include :

parameter d(i,j) 'distance in thousands of miles' /

$ondelim

$include export.txt

$offdelim

/;

display d;

Export to DB2

DB2 has an IMPORT command that can read delimited files. As an example consider the file generated by GAMS PUT statements:

"seattle","new-york",50.00 "seattle","chicago",300.00 "seattle","topeka",0.00 "san-diego","new-york",275.00 "san-diego","chicago",0.00 "san-diego","topeka",275.00

A transcript of a DB2 session to read this file, is given below:

------------------------------------- Command Entered -------------------------------------

create table results(loca varchar(10) not null,

locb varchar(10) not null,

shipment double not null) ;

-------------------------------------------------------------------------------------------

DB20000I The SQL command completed successfully.

------------------------------------- Command Entered -------------------------------------

import from c:\tmp\import.txt of del insert into results ;

-------------------------------------------------------------------------------------------

SQL3109N The utility is beginning to load data from file "c:\tmp\import.txt".

SQL3110N The utility has completed processing. "6" rows were read from the

input file.

SQL3221W ...Begin COMMIT WORK. Input Record Count = "6".

SQL3222W ...COMMIT of any database changes was successful.

SQL3149N "6" rows were processed from the input file. "6" rows were

successfully inserted into the table. "0" rows were rejected.

Number of rows read = 6

Number of rows skipped = 0

Number of rows inserted = 6

Number of rows updated = 0

Number of rows rejected = 0

Number of rows committed = 6

For very large data sets it is advised to use the LOAD command:

------------------------------------- Command Entered ------------------------------------- load from c:\tmp\import.txt of del insert into results ; ------------------------------------------------------------------------------------------- SQL3501W The table space(s) in which the table resides will not be placed in backup pending state since forward recovery is disabled for the database. SQL3109N The utility is beginning to load data from file "c:\tmp\import.txt". SQL3500W The utility is beginning the "LOAD" phase at time "03-20-2000 18:11:50.213782". SQL3519W Begin Load Consistency Point. Input record count = "0". SQL3520W Load Consistency Point was successful. SQL3110N The utility has completed processing. "6" rows were read from the input file. SQL3519W Begin Load Consistency Point. Input record count = "6". SQL3520W Load Consistency Point was successful. SQL3515W The utility has finished the "LOAD" phase at time "03-20-2000 18:11:50.337092". Number of rows read = 6 Number of rows skipped = 0 Number of rows loaded = 6 Number of rows rejected = 0 Number of rows deleted = 0 Number of rows committed = 6

For smaller data sets one can also generate a series of INSERT statements using the PUT facility.

Data Exchange with MS Access

Microsoft Office Access, previously known as Microsoft Access, is a relational database management system from Microsoft. It is a member of the Microsoft Office system.

Import from MS Access

MDB2GMS

MDB2GMS is a tool to convert data from an Microsoft Access database into GAMS readable format. The source is an MS Access database file (*.mdb or *.accdb) and the target is a GAMS Include File or a GAMS GDX File. MDB2GMS is part of the GAMS Data eXchange Tools, see documentation for more information.

SQL2GMS

SQL2GMS is a tool to convert data from an SQL database into GAMS readable format. The source is any data source accessible through Microsoft's Data Access components including ADO, ODBC and OLEDB. The target is a GAMS Include File or a GAMS GDX File. SQL2GMS is part of the GAMS Data eXchange Tools, see documentation for more information.

CSV Files

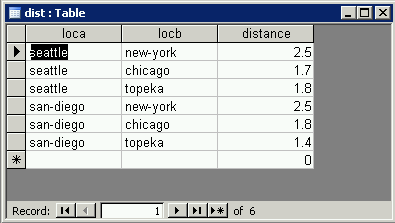

Microsoft Access can export tables into comma delimited text files using its Save As/Export menu. Suppose we have the following table:

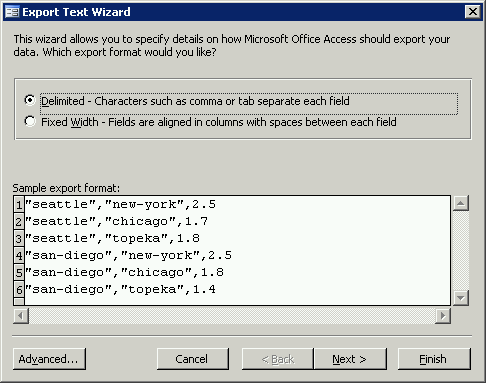

After choosing Save As/Export and selecting Text Files we get the following window:

Just using the default settings, we get the following file:

"seattle","new-york",2.50 "seattle","chicago",1.70 "seattle","topeka",1.80 "san-diego","new-york",2.50 "san-diego","chicago",1.80 "san-diego","topeka",1.40

which can be handled in GAMS by $ondelim/$offdelim and $include:

parameter d(i,j) 'distance in thousands of miles' /

$ondelim

$include dist.txt

$offdelim

/;

display d;

Import Dates from Access

GAMS dates are one day off when importing from MS Access. Suppose we have an MS Access table with one single date column:

datefield ---------- 3/12/2007 3/13/2007 10:00:00 AM 3/14/2007 8:30:00 PM

The date data above can be imported into GAMS using $call and MDB2GMS tool as follows:

$call =mdb2gms I="%system.fp%Sample.accdb" Q="select datefield,Cdbl(datefield) from datetable" O=x.inc

parameter p(*) /

$include x.inc

/;

display p;

alias(*,i);

parameter q(*,*);

loop(i$p(i),

q(i,'year') = gyear(p(i));

q(i,'month') = gmonth(p(i));

q(i,'day') = gday(p(i));

q(i,'hour') = ghour(p(i));

q(i,'minute') = gminute(p(i));

);

display q;

Note that the Cdbl() function converts the date to a floating point number (double precision). The generated include file looks like:

* ----------------------------------------------------- * MDB2GMS Version 2.8, January 2007 * Erwin Kalvelagen, GAMS Development Corp * ----------------------------------------------------- * DAO version: 3.6 * Jet version: 4.0 * Database: D:\mdb2gms\examples\Sample.accdb * Query: select datefield,Cdbl(datefield) from datetable * ----------------------------------------------------- '3/12/2007' 39153 '3/13/2007 10:00:00 AM' 39154.4166666667 '3/14/2007 8:30:00 PM' 39155.8541666667 * -----------------------------------------------------

which looks o.k. However, when we look at the GAMS results in the listing file we see:

---- 28 PARAMETER p

3/12/2007 39153.000, 3/13/2007 10:00:00 AM 39154.417, 3/14/2007 8:30:00 PM 39155.854

---- 39 PARAMETER q

year month day hour minute

3/12/2007 2007.000 3.000 13.000

3/13/2007 10:00:00 AM 2007.000 3.000 14.000 10.000

3/14/2007 8:30:00 PM 2007.000 3.000 15.000 20.000 30.000

Clearly the dates are off by one day: see the column day. We can fix this problem in different places, e.g. in the query or in the GAMS model by subtracting 1.0 from an imported date. This problem occurs not only in MS Access but also with other software packages.

Export to MS Access

GDX2ACCESS

GDX2ACCESS is a tool to dump the whole contents of a GDX file to a new MS Access file (.mdb or .accdb file). GDX2ACCESS is part of the GAMS Data eXchange Tools, see documentation for more information.

GDXVIEWER

Access tables in MS Access files can be directly generated by the GDXVIEWER tool. The GDXVIEWER tool uses OLE automation to export data to an MS Access database. This means that MS Access needs to be installed for the Access Export facility to work. GDXVIEWER is part of the GAMS Data eXchange Tools, see documentation for more information.

VBScript

VBScript is a scripting tool that can be used to talk to COM objects. In this case we use it to tell Access to import a CSV file.

$ontext

Import a table into MS Access using VBscript

$offtext

$if exist new.accdb $call del new.accdb

set i /i1*i10/;

alias (i,j);

parameter p(i,j);

p(i,j) = uniform(-100,100);

display p;

file f /data.csv/;

f.pc=5;

put f,'i','j','p'/;

loop((i,j),

put i.tl, j.tl, p(i,j):12:8/

);

putclose;

execute "=cscript access.vbs";

$onecho > access.vbs

'this is a VBscript script

WScript.Echo "Running script: access.vbs"

dbLangGeneral = ";LANGID=0x0409;CP=1252;COUNTRY=0"

strSQL = "SELECT * INTO mytable FROM [Text;HDR=Yes;Database=%system.fp%;FMT=Delimited].[data#csv]"

Wscript.Echo "Query : " & strSQL

Set oJet = CreateObject("DAO.DBEngine.36")

Wscript.Echo "Jet version : " & oJet.version

Set oDB = oJet.createDatabase("new.accdb",dbLangGeneral)

Wscript.Echo "Created : " & oDB.name

oDB.Execute strSQL

Set TableDef = oDB.TableDefs("mytable")

Wscript.Echo "Rows inserted in mytable : " & TableDef.RecordCount

oDB.Close

Wscript.Echo "Done"

$offecho

The CSV file contains a header row with the names of the fields:

"i","j","p" "i1","i1",-65.65057360 "i1","i2",68.65334160 "i1","i3",10.07507120 "i1","i4",-39.77241920 "i1","i5",-41.55757660 ....

The text driver specification HDR=Yes makes sure the first row in the CSV file is treated specially. The log will look like:

U:\temp>gams vbaccess.gms --- Job vbaccess.gms Start 01/28/08 16:57:37 GAMS Rev 149 Copyright (C) 1987-2007 GAMS Development. All rights reserved ... --- Starting compilation --- vbaccess.gms(4) 2 Mb --- call del new.accdb --- vbaccess.gms(38) 3 Mb --- Starting execution: elapsed 0:00:00.109 --- vbaccess.gms(18) 4 Mb Microsoft (R) Windows Script Host Version 5.6 Copyright (C) Microsoft Corporation 1996-2001. All rights reserved. Running script: access.vbs Query : SELECT * INTO mytable FROM [Text;HDR=Yes;Database=U:\temp\;FMT=Delimited ].[data#csv] Jet version : 3.6 Created : U:\temp\new.accdb Rows inserted in mytable : 100 Done --- Putfile f U:\temp\data.csv *** Status: Normal completion --- Job vbaccess.gms Stop 01/28/08 16:57:38 elapsed 0:00:00.609 U:\temp>

Please note that although the $onecho/$offecho is at the bottom of the GAMS file, the file access.vbs is created at compile time. I.e. before the executable statements like PUT, EXECUTE are executed.

JScript

The same script using JScript is similar to the one with VScript. We only price the script itself.

$ontext

Import a table into MS Access using JScript

$offtext

$if exist new.accdb $call del new.accdb

set i /i1*i10/;

alias (i,j);

parameter p(i,j);

p(i,j) = uniform(-100,100);

display p;

file f /data.csv/;

f.pc=5;

put f,'i','j','p'/;

loop((i,j),

put i.tl, j.tl, p(i,j):12:8/

);

putclose;

execute "=cscript access.js";

$onecho > access.js

// this is a JScript script

WScript.Echo("Running script: access.js");

dbLangGeneral = ";LANGID=0x0409;CP=1252;COUNTRY=0";

strSQL = "SELECT * INTO mytable FROM [Text;HDR=Yes;Database=.;FMT=Delimited].[data#csv]";

WScript.Echo("Query : ",strSQL);

oJet = new ActiveXObject("DAO.DBEngine.36");

WScript.Echo("Jet version : ",oJet.version);

oDB = oJet.createDatabase("new.accdb",dbLangGeneral);

WScript.Echo("Created : ",oDB.name);

oDB.Execute(strSQL);

TableDef = oDB.TableDefs("mytable");

WScript.Echo("Rows inserted in mytable : ",TableDef.RecordCount);

oDB.Close();

WScript.Echo("Done");

$offecho

Combining GDX2ACCESS and VBscript

Data in a GDX file do not contain domain information. I.e. a parameter c(i,j) is really stored as c(*,*). As a result GDX2ACCESS will invent field names like dim1, dim2, Value. In some cases this may not be convenient, e.g. when more descriptive field names are required. We will show how a small script in VBscript can handle this task. The script will rename the fields dim1, dim2, Value in table c to i, j, and transportcost.

$call "gamslib 1"

$include trnsport.gms

*

* export to gdx file.

* The domains i,j are lost: gdx only stores c(*,*)

execute_unload "c.gdx",c;

*

* move to access database

* column names are dim1,dim2

*

execute "=gdx2access c.gdx";

*

* rename columns

*

execute "=cscript access.vbs";

$onecho > access.vbs

'this is a VBscript script

WScript.Echo "Running script: access.vbs"

' Office 2000 DAO version

' Change to local situation.

Set oDAO = CreateObject("DAO.DBEngine.36")

script.Echo "DAO version : " & oDAO.version

Set oDB = oDAO.openDatabase("%system.fp%c.accdb")

Wscript.Echo "Opened : " & oDB.name

Set oTable = oDB.TableDefs.Item("c")

Wscript.Echo "Table : " & oTable.name

' rename fields

oTable.Fields.Item("dim1").name = "i"

oTable.Fields.Item("dim2").name = "j"

oTable.Fields.Item("Value").name = "transportcost"

Wscript.Echo "Renamed fields"

oDB.Close

Wscript.Echo "Done"

$offecho

The above VBscript fragment needs to be adapted according to the DAO Data Access Objects version available on the client machine. This can be implemented in a more robust fashion by letting MS Access find the DAO engine:

'this is a VBscript script

WScript.Echo "Running script: access.vbs"

set oa = CreateObject("Access.Application")

set oDAO = oa.DBEngine

Wscript.Echo "DAO Version: " & oDAO.version

Set oDB = oDAO.openDatabase("%system.fp%c.accdb")

Wscript.Echo "Opened : " & oDB.name

Set oTable = oDB.TableDefs.Item("c")

Wscript.Echo "Table : " & oTable.name

' rename fields

oTable.Fields.Item("dim1").name = "i"

oTable.Fields.Item("dim2").name = "j"

oTable.Fields.Item("Value").name = "transportcost"

Wscript.Echo "Renamed fields"

oDB.Close

Wscript.Echo "Done"

Please note that the macro %system.fp% is replaced by GAMS by the working directory (this is the project directory when running GAMS from the IDE).

Data Exchange with MySQL

MySQL is a multi-threaded, multi-user SQL database management system.

Import from MySQL

MySQL can write the results of a SELECT statement to a file as follows:

mysql> select * from dist;

+-----------+----------+----------+

| loca | locb | distance |

+-----------+----------+----------+

| seattle | new-york | 50 |

| seattle | chicago | 300 |

| seattle | topeka | 0 |

| san-diego | new-york | 275 |

| san-diego | chicago | 0 |

| san-diego | topeka | 275 |

+-----------+----------+----------+

6 rows in set (0.01 sec)

mysql> select * from dist into outfile '/tmp/data.csv'

-> fields terminated by ','

-> optionally enclosed by '"'

-> lines terminated by '\n';

Query OK, 6 rows affected (0.00 sec)

The resulting CSV file looks like:

"seattle","new-york",50 "seattle","chicago",300 "seattle","topeka",0 "san-diego","new-york",275 "san-diego","chicago",0 "san-diego","topeka",275

which can be read by GAMS directly. This approach can be automated as follows:

[erwin@localhost erwin]$ cat myscript

use test

select * from dist into outfile '/tmp/data.csv'

fields terminated by ','

optionally enclosed by '"'

lines terminated by '\n';

[erwin@localhost erwin]$ cat x.gms

set i /seattle, san-diego/;

set j /new-york, chicago, topeka/;

$call 'mysql -u root < myscript'

parameter dist(i,j) /

$ondelim

$include /tmp/data.csv

$offdelim

/;

display dist;

[erwin@localhost erwin]$ gams x

GAMS Rev 132 Copyright (C) 1987-2002 GAMS Development. All rights reserved

Licensee: GAMS Development Corporation, Washington, DC G871201:0000XX-XXX

Free Demo, 202-342-0180, sales@gams.com, www.gams.com DC9999

--- Starting compilation

--- x.gms(5) 1 Mb

--- call mysql -u root < myscript

--- .data.csv(6) 1 Mb

--- x.gms(15) 1 Mb

--- Starting execution

--- x.gms(18) 1 Mb

*** Status: Normal completion

[erwin@localhost erwin]$

The listing file shows that the table is read correctly:

1

2 set i /seattle, san-diego/;

3 set j /new-york, chicago, topeka/;

4

6 parameter dist(i,j) /

INCLUDE /tmp/data.csv

9 "seattle","new-york",50

10 "seattle","chicago",300

11 "seattle","topeka",0

12 "san-diego","new-york",275

13 "san-diego","chicago",0

14 "san-diego","topeka",275

16 /;

17

18 display dist;

19

20

21

SEQ GLOBAL TYPE PARENT LOCAL FILENAME

1 1 INPUT 0 0 /home/erwin/x.gms

2 5 CALL 1 5 mysql -u root < myscript

3 8 INCLUDE 1 8 ./tmp/data.csv

---- 18 PARAMETER dist

new-york chicago topeka

seattle 50.000 300.000

san-diego 275.000 275.000

Instead of maintaining the MySQL script in a separate file, it can also be written by GAMS using $onecho/$offecho and a statement like:

$onecho > myscript

use test

select * from dist into outfile '/tmp/data.csv'

fields terminated by ','

optionally enclosed by '"'

lines terminated by '\n';

$offecho

This will write the script at compile time.

Export to MySQL

GAMS can export data to MySQL by creating a script containing a series of SQL INSERT statements, as shown in section Oracle CSV Import .

It is noted that MySQL does have a REPLACE statement which is a useful blend of an INSERT and UPDATE statement: update a row if it already exists, otherwise insert it. This is not standard SQL however, so it can cause problems when moving to another database.

For larger result sets it may be better to use the LOAD DATA INFILE command. This command can read directly ASCII text files such as comma delimited CSV files.

Consider again the data file created by the PUT statement:

"seattle","new-york",50.00 "seattle","chicago",300.00 "seattle","topeka",0.00 "san-diego","new-york",275.00 "san-diego","chicago",0.00 "san-diego","topeka",275.00

The following transcript shows how to import this into MySQL:

myql> create table dist(loca varchar(10), locb varchar(10), distance double precision);

Query OK, 0 rows affected (0.00 sec)

mysql> show tables;

+----------------+

| Tables_in_test |

+----------------+

| dist |

+----------------+

1 row in set (0.00 sec)

mysql> describe dist;

+----------+-------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+----------+-------------+------+-----+---------+-------+

| loca | varchar(10) | YES | | NULL | |

| locb | varchar(10) | YES | | NULL | |

| distance | double | YES | | NULL | |

+----------+-------------+------+-----+---------+-------+

3 rows in set (0.00 sec)

mysql> load data infile '/tmp/data.txt' into table dist

-> fields terminated by ','

-> optionally enclosed by '"'

-> lines terminated by '\n';

Query OK, 6 rows affected (0.00 sec)

Records: 6 Deleted: 0 Skipped: 0 Warnings: 0

mysql> select * from dist;

+-----------+----------+----------+

| loca | locb | distance |

+-----------+----------+----------+

| seattle | new-york | 50 |

| seattle | chicago | 300 |

| seattle | topeka | 0 |

| san-diego | new-york | 275 |

| san-diego | chicago | 0 |

| san-diego | topeka | 275 |

+-----------+----------+----------+

6 rows in set (0.00 sec)

Note that we used no keys in our table definition. In practice it is of course highly recommended to define proper keys.

Data Exchange with Oracle

The Oracle Database (commonly referred to as Oracle RDBMS or simply as Oracle) is a relational database management system (RDBMS) software product released by Oracle Corporation.

Import from Oracle

SQL*Plus

To export an Oracle table a simple solution is to write an SQL*Plus script. E.g. if our table looks like:

SQL> describe dist; Name Null? Type ----------------------------------- -------- ------------------------ LOCA NOT NULL VARCHAR2(10) LOCB NOT NULL VARCHAR2(10) DISTANCE NUMBER SQL> select * from dist; LOCA LOCB DISTANCE ---------- ---------- --------- seattle new-york 2.5 seattle chicago 1.7 seattle topeka 1.8 san-diego new-york 2.5 san-diego chicago 1.8 san-diego topeka 1.4 6 rows selected. SQL>

then the following script will export this table:

set pagesize 0 set pause off set heading off spool data select loca||','||locb||','||distance from dist; spool off

The resulting data file ''data.lst'' will look like:

seattle,new-york,2.5 seattle,chicago,1.7 seattle,topeka,1.8 san-diego,new-york,2.5 san-diego,chicago,1.8 san-diego,topeka,1.4

This almost looks like our data initialization syntax for parameters:

SEATTLE.NEW-YORK 2.5 SAN-DIEGO.NEW-YORK 2.5 SEATTLE.CHICAGO 1.7 SAN-DIEGO.CHICAGO 1.8 SEATTLE.TOPEKA 1.8 SAN-DIEGO.TOPEKA 1.4

The only differences are in the delimiters that are being used. These differences are easily digested by GAMS once it is in ondelim mode. I.e. the following syntax can be used to read the data.lst file:

parameter d(i,j) 'distance in thousands of miles' /

$ondelim

$include data.lst

$offdelim

/;

display d;

SQL2GMS

An alternative way to import data from Oracle is to use the tool SQL2GMS which can talk to any database with an ADO or ODBC interface.

Import dates from Oracle databases and converting them to GAMS dates

For most softwares it is easy to generate dates that GAMS can import and understand. The most common issue is that GAMS is one day off compared to Excel, Delphi, Access, ODBC etc. Oracle is somewhat more involved. First it is useful to have the date/time exported as a Julian date. This can be done with the following stored procedure:

-- julian representation of a date/time -- Erwin Kalvelagen, feb 2007 create or replace function to_julian(d IN TIMESTAMP) return number is begin return to_number(to_char(d,'J')) + to_number(to_char(d,'SSSSS'))/86400; end;

This function can be used to export dates as simple floating point numbers. In GAMS we need just a simple adjustment by adding a constant ''datediff'' defined by:

scalar

refdategams "march 16, 2006, 00:00"

refdateoracle "march 16, 2006, 00:00" /2453811/

datediff "difference between GAMS and Oracle date"

;

refdategams = jdate(2006,3,16);

datediff = refdategams-refdateoracle;

This trick has been applied in a complex scheduling application where dates are important data types that must be exchanged between the application logic and database tier and the optimization engine.

Export to Oracle

Oracle CSV Import

A familiar way of moving data into Oracle is to generate standard SQL INSERT statements. The PUT facility is flexible enough to handle this. For instance the following code:

file results /results.sql/;

results.lw=0;

results.nw=0;

put results;

loop((i,j),

put "insert into result (loca, locb, shipment) ";

put "values ('",i.tl,"','",j.tl,"',",x.l(i,j),");"/

);

putclose;

will generate these SQL statements:

insert into result (loca, locb, shipment) values ('seattle','new-york',50.00);

insert into result (loca, locb, shipment) values ('seattle','chicago',300.00);

insert into result (loca, locb, shipment) values ('seattle','topeka',0.00);

insert into result (loca, locb, shipment) values ('san-diego','new-york',275.00);

insert into result (loca, locb, shipment) values ('san-diego','chicago',0.00);

insert into result (loca, locb, shipment) values ('san-diego','topeka',275.00);

The .lw and .nw attributes for the put file indicate that no extra spaces around the labels and the numeric values are needed. These field width attributes have a default value of 12 which would cause the values to look like:

'seattle ','new-york ', 50.00

If the amount of data is large the utility SQL*Loader can be used to import comma delimited input. I.e. the GAMS code:

file results /results.txt/;

results.pc=5;

put results;

loop((i,j),

put i.tl, j.tl, x.l(i,j)/

);

putclose;

produces a file results.txt:

"seattle","new-york",50.00

"seattle","chicago",300.00

"seattle","topeka",0.00

"san-diego","new-york",275.00

"san-diego","chicago",0.00

"san-diego","topeka",275.00

The following SQL*Loader control file will read this file:

LOAD DATA INFILE results.txt INTO TABLE result FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"' (loca,locb,shipment)

GDX to Oracle

Database tables in an SQL RDBMS can be directly generated by the GDXVIEWER tool. The GDXVIEWER can use three methods to export to Oracle and other RDBMS:

- The direct ADO/ODBC link can create a new table and populate it.

- The SQL INSERT script generator can create a script with a number of

INSERTstatements. - The SQL

UPDATEscript generator can create a script with a number of UPDATE statements.

Data Exchange with SQL Server

Import from SQL Server

Microsoft SQL Server is Microsoft's flagship database. It comes in different flavors, including SQL Server, MSDE and SQL Server Express.

Using SQL2GMS

A good way to import SQL server data into GAMS is using the SQL2GMS tool. Below is an example of its use:

$set commandfile commands.txt

$onecho > %commandfile%

C=provider=sqloledb;data source=athlon\SQLExpress;Initial catalog=test;user id=sa;password=password

O=C:\WINNT\gamsdir\xx.inc

Q=SELECT * FROM x

$offecho

$call =sql2gms @%commandfile%

parameter p(i,j) /

$include "C:\WINNT\gamsdir\xx.inc"

/;

display p;

Using the BCP utilty and CSV files

To export SQL Server data to CSV files we can use the BCP utility.

C:\Program Files\Microsoft SQL Server\90\Tools\binn>bcp test..results out x.csv \ -S athlon\sqlexpress -c -U sa -P password -t, Starting copy... 6 rows copied. Network packet size (bytes): 4096 Clock Time (ms.) Total : 10 Average : (600.00 rows per sec.) C:\Program Files\Microsoft SQL Server\90\Tools\binn>type x.csv seattle,new-york,50.0 seattle,chicago,300.0 seattle,topeka,0.0 san-diego,new-york,275.0 san-diego,chicago,0.0 san-diego,topeka,275.0

It is somewhat more difficult to create a proper CSV file. A format specification file can help here. For an example see the next section on Data Exchange with Sybase . Other tools to export files include DTS (Data Transformation Services) and linked ODBC data sources.

A direct interface between SQL server tables and GAMS GDX files

Finally we can program directly an interface between SQL server tables and GAMS GDX files. A small example in C# can look like:

Export to SQL Server

SQL Server has two basic facilities to import CSV files: the BCP tool and the BULK INSERT statement. Advanced SQL Server users may also be able to use DTS (Data Transformation Services) or linked ODBC data sources. Of course for small data sets we can create standard SQL INSERT statements. In addition the tool GDXVIEWER can be used to get GAMS data into SQL Server.

Export using the BCP tool

A transcript showing the use of BCP is shown below:

C:\Program Files\Microsoft SQL Server\90\Tools\binn>sqlcmd -S athlon\SQLExpress 1> use test; 2> create table x(loca varchar(10), locb varchar(10), shipment float); 3> go Changed database context to 'test'. 1> quit C:\Program Files\Microsoft SQL Server\90\Tools\binn>type c:\winnt\gamsdir\results.csv seattle,new-york,50.00 seattle,chicago,300.00 seattle,topeka,0.00 san-diego,new-york,275.00 san-diego,chicago,0.00 san-diego,topeka,275.00 C:\Program Files\Microsoft SQL Server\90\Tools\binn>bcp test..x in c:\winnt\gamsdir\results.csv \ -S athlon\sqlexpress -c -U sa -P password -t, Starting copy... 6 rows copied. Network packet size (bytes): 4096 Clock Time (ms.) Total : 10 Average : (600.00 rows per sec.) C:\Program Files\Microsoft SQL Server\90\Tools\binn>sqlcmd -S athlon\SQLExpress 1> use test 2> select * from x; 3> go Changed database context to 'test'. loca locb shipment ---------- ---------- ------------------------ seattle new-york 50 seattle chicago 300 seattle topeka 0 san-diego new-york 275 san-diego chicago 0 san-diego topeka 275 (6 rows affected) 1> quit

Unfortunately, dealing with quoted strings is not straightforward with this tool (an example using a format file is shown in the next section on Data Exchange with Sybase). The same thing holds for BULK INSERT, which can read:

C:\Program Files\Microsoft SQL Server\90\Tools\binn>type c:\winnt\gamsdir\results.csv seattle,new-york,50.00 seattle,chicago,300.00 seattle,topeka,0.00 san-diego,new-york,275.00 san-diego,chicago,0.00 san-diego,topeka,275.00 C:\Program Files\Microsoft SQL Server\90\Tools\binn>sqlcmd -S athlon\SQLExpress 1> use test 2> create table x(loca varchar(10), locb varchar(10), shipment float) 3> go Changed database context to 'test'. 1> bulk insert x from 'c:\winnt\gamsdir\results.csv' with (fieldterminator=',') 2> go (6 rows affected) 1> select * from x 2> go loca locb shipment ---------- ---------- ------------------------ seattle new-york 50 seattle chicago 300 seattle topeka 0 san-diego new-york 275 san-diego chicago 0 san-diego topeka 275 (6 rows affected) 1> quit C:\Program Files\Microsoft SQL Server\90\Tools\binn>

Export using the ODBC Text Driver

A slower but flexible way to load CSV files is to use a linked server through the ODBC Text Driver. First create an ODBC DSN using the Text Driver. This can be done through the ODBC Data Source Administrator Data Sources (ODBC) Then we can use the system procedure SP_AddLinkedServer.

C:\Program Files\Microsoft SQL Server\90\Tools\binn>type c:\winnt\gamsdir\results.csv "seattle","new-york",50.00 "seattle","chicago",300.00 "seattle","topeka",0.00 "san-diego","new-york",275.00 "san-diego","chicago",0.00 "san-diego","topeka",275.00 C:\Program Files\Microsoft SQL Server\90\Tools\binn>type trnsport.sql -- -- test database -- use test -- -- create table in SQL server -- create table results(loca varchar(10), locb varchar(10), ship float) GO -- -- Create a linked server -- EXEC sp_addlinkedserver txtsrv,'Jet 4.0','Microsoft.Jet.OLEDB.4.0','c:\winnt\gamsdir',NULL,'Text' GO -- -- copy data from text file c:\winnt\gamsdir\results.csv -- insert into results(loca,locb,ship) select * from txtsrv...results#csv go -- -- check if all arrived -- select * from results go -- -- release linked server -- EXEC sp_dropserver txtsrv GO -- -- clean up -- drop table results go C:\Program Files\Microsoft SQL Server\90\Tools\binn>sqlcmd -S athlon\sqlexpress 1> :r trnsport.sql Changed database context to 'test'. (6 rows affected) loca locb ship ---------- ---------- ------------------------ seattle new-york 50 seattle chicago 300 seattle topeka 0 san-diego new-york 275 san-diego chicago 0 san-diego topeka 275 (6 rows affected) 1> quit C:\Program Files\Microsoft SQL Server\90\Tools\binn>

A slightly different approach is the following:

C:\Program Files\Microsoft SQL Server\90\Tools\binn>sqlcmd -S athlon\sqlexpress

1> create table t(loca varchar(10), locb varchar(10), ship float)

2> go

1> insert into t

2> select * from

3> OpenRowSet('Microsoft.Jet.OLEDB.4.0',

4> 'Text;Database=c:\winnt\gamsdir\;HDR=NO',

5> 'select * from results.csv')

6> go

(6 rows affected)

1> select * from t

2> go

loca locb ship

---------- ---------- ------------------------

seattle new-york 50

seattle chicago 300

seattle topeka 0

san-diego new-york 275

san-diego chicago 0

san-diego topeka 275

(6 rows affected)

1> drop table t

2> go

1> quit

C:\Program Files\Microsoft SQL Server\90\Tools\binn>

This can be automated from GAMS as follows:

file results /results.csv/;

results.pc=5;

put results;

loop((i,j),

put i.tl, j.tl, x.l(i,j)/

);

putclose;

file sqlinsert /insert.sql/;

put sqlinsert;

put "use test"/;

put "insert into t select * from OpenRowSet('Microsoft.Jet.OLEDB.4.0',"

"'Text;Database=c:\winnt\gamsdir\;HDR=NO','select * from results.csv')"/;

putclose;

execute '="C:\Progra~1\Microsoft SQL Server\90\Tools\binn\sqlcmd" -S athlon\SQLExpress -i insert.sql';

Export using the GDXVIEWER

When using GDXVIEWER to export data to MS SQL server it is noted that MSSQL Server does not accept the default SQL type double for double precision numbers. You will need to set this setting to float or double precision.

When we export variable x from the trnsport model, we see:

C:\Program Files\Microsoft SQL Server\90\Tools\binn>sqlcmd -S athlon\SQLExpress 1> use test 2> go Changed database context to 'test'. 1> select * from x 2> go dim1 dim2 level ------------------------------- ------------------------------- ------------------------ seattle new-york 50 seattle chicago 300 seattle topeka 0 san-diego new-york 275 san-diego chicago 0 san-diego topeka 275 (6 rows affected) 1> quit C:\Program Files\Microsoft SQL Server\90\Tools\binn>

Data Exchange with SQLite

See GDX2SQLITE for more information.

Data Exchange with Sybase

Import from Sybase

Import using the bcp utility

Sybase is largely the same as SQL Server. For exporting ASCII files from a Sybase table, the utility (Using the BCP utilty and CSV files) can be used.

An example of use of this utility is shown below:

[erwin@fedora sybase]$ isql -U sa -S LOCALHOST -D testdb -P sybase -J iso_1 1> select * from results 2> go loca locb shipment ---------- ---------- -------------------- seattle new-york 50.000000 seattle chicago 300.000000 seattle topeka 0.000000 san-diego new-york 275.000000 san-diego chicago 0.000000 san-diego topeka 275.000000 (6 rows affected) 1> quit [erwin@fedora sybase]$ cat bcp.fmt 10.0 4 1 SYBCHAR 0 0 "\"" 1 loca 2 SYBCHAR 0 10 "\",\"" 1 loca 3 SYBCHAR 0 10 "\"," 2 locb 4 SYBCHAR 0 17 "\n" 3 shipment [erwin@fedora sybase]$ bcp testdb..results out res.txt -S LOCALHOST -U sa -P sybase \ -J iso_1 -f bcp.fmt Starting copy... 6 rows copied. Clock Time (ms.): total = 1 Avg = 0 (6000.00 rows per sec.) [erwin@fedora sybase]$ cat res.txt "seattle","new-york",50.0 "seattle","chicago",300.0 "seattle","topeka",0.0 "san-diego","new-york",275.0 "san-diego","chicago",0.0 "san-diego","topeka",275.0 [erwin@fedora sybase]$

Note: the first column in the format file is a dummy (it has length 0). This is in order to write the leading quote, as bcp only allows for termination symbols.

This can be automated using the following GAMS code:

sets i 'canning plants' / seattle, san-diego / j 'markets' / new-york, chicago, topeka / ; $onecho > bcp.fmt 10.0 4 1 SYBCHAR 0 0 "\"" 1 loca 2 SYBCHAR 0 10 "\",\"" 1 loca 3 SYBCHAR 0 10 "\"," 2 locb 4 SYBCHAR 0 17 "\n" 3 shipment $offecho $call "bcp testdb..results out res.txt -S LOCALHOST -U sa -P sybase -J iso_1 -f bcp.fmt" parameter d(i,j) 'distance in thousands of miles' / $ondelim $include res.txt $offdelim /; display d;

Import using the SQL2GMS utility

The SQL2GMS tool uses ADO or ActiveX Data Objects to extract data from relational databases. It can connect to almost any database from any vendor as it supports standards like ODBC. See documentation for more information.

Import using a 'SQL2GMS' VBS script

The following GAMS code will generate and execute a script written in VBScript. It mimics the behavior of SQL2GMS.EXE and can be used for debugging or the script can be passed on to the IT support people in case there are problems with accessing the database.

$ontext

This script mimics SQL2GMS.

Erwin Kalvelagen

November 2006

$offtext

$onecho > sql2gms.vbs

'

' parameters

'

t1 = 3 ' connection timeout

t2 = 0 ' command timeout

c = "Provider=MSDASQL;Driver={SQL Server};Server=DUOLAP\SQLEXPRESS;Database=testdata;

Uid=gams;Pwd=gams;" ' connection string

q = "select * from data" ' query

o = "output.inc" ' the output file to be generated

b = false ' whether to quote indices (e.g. because of embedded blanks)

'

' create ADO connection object

'

set ADOConnection = CreateObject("ADODB.Connection")

ADOVersion = ADOConnection.Version

WScript.Echo "ADO Version:",ADOVersion

'

' make db connection

'

ADOConnection.ConnectionTimeout = t1

ADOConnection.ConnectionString = c

ADOConnection.Open

'

' Open file

'

set fso = CreateObject("Scripting.FileSystemObject")

set outputfile = fso.CreateTextFile(o,True)

outputfile.writeLine "*----------------------------------------------------"

outputfile.writeLine "* SQL2GMS/Vbscript 1.0"

outputfile.writeLine "* Connection:"&c

outputfile.writeLine "* Query:"&q

outputfile.writeLine "*----------------------------------------------------"

'

' setup query

'

starttime = time

ADOConnection.CommandTimeout = t2

const adCmdText = 1

set RecordSet = ADOConnection.Execute(q,,adCmdText)

'

' get results

'

NumberOfFields = RecordSet.Fields.Count

eof = RecordSet.EOF

if eof then

WScript.Echo "No records"

Wscript.quit

end if

'

' loop through records

'

NumberOfRows = 0

do until eof

NumberOfRows = NumberOfRows + 1

Row = RecordSet.GetRows(1)

if NumberOfFields > 1 then

s = Row(0,0)

if b then

s = quotestring(s)

end if

Outputfile.Write s

end if

for i=2 to NumberOfFields-1

s = Row(i-1,0)

if b then

s = quotestring(s)

end if

Outputfile.Write "."

Outputfile.Write s

next

s = Row(NumberOfFields-1,0)

OutputFile.Write " "

OutputFile.Writeline s

eof = RecordSet.EOF

loop

OutputFile.Close

Wscript.echo "Records read:"&NumberOfRows

Wscript.echo "Elapsed time:"&DateDiff("s",starttime,time)&" seconds."

function quotestring(s)

has_single_quotes = false

has_double_quotes = false

needs_quoting = false

'

' check input string for special characters

'

for j=1 to len(s)

ch = Mid(s,j,1)

select case ch

case "'"

has_single_quotes = true

case """"

has_double_quotes = true

case " ","/",";",","

needs_quoting = true

case else

k = asc(ch)

if (k<=31) or (k>=127) then

needs_quoting = true

end if

end select

next

'

' check if we have if gams keyword

'

kw = array("ABORT","ACRONYM","ACRONYMS","ALIAS","BINARY","DISPLAY","ELSE", _

"EQUATION","EQUATIONS","EXECUTE","FILE","FILES","FOR","FREE", _

"IF","INTEGER","LOOP","MODEL","MODELS","NEGATIVE","OPTION", _

"OPTIONS","PARAMETER","PARAMETERS","POSITIVE","PROCEDURE", _

"PROCEDURES","PUT","PUTCLEAR","PUTCLOSE","PUTHD","PUTPAGE", _

"PUTTL","SCALAR","SCALARS","SEMICONT","SET","SETS","SOS1", _

"SOS2","TABLE","VARIABLE","VARIABLES","WHILE")

if not needs_quoting then

for j = 0 to Ubound(kw)

if strcomp(s,kw(j),1)=0 then

needs_quoting = true

exit for

end if

next

end if

'

' already quoted?

'

ch = left(s,1)

select case ch

case "'", """"

quotestring = s

exit function

end select

' check for special case

if has_single_quotes and has_double_quotes then

quotestring = """" & replace(s, """", "'") & """"

elseif has_single_quotes then

quotestring = """" & s & """"

elseif has_double_quotes then

quotestring = "'" & s & "'"

elseif needs_quoting then

quotestring = "'" & s & "'"

else

quotestring = s

end if

end function

$offecho

execute '=cscript sql2gms.vbs';