Table of Contents

Concept

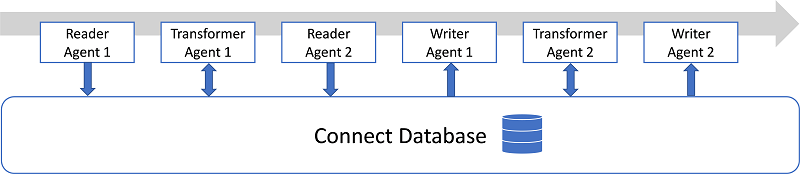

GAMS Connect is a framework inspired by the concept of a so-called ETL (extract, transform, load) procedure that allows to integrate data from various data sources. The GAMS Connect framework consists of the Connect database and the Connect agents that operate on the Connect database. Via the available Connect interfaces the user passes instructions to call Connect agents for reading data from various file types into the Connect database, transforming data in the Connect database, and writing data from the Connect database to various file types. Instructions are passed in YAML syntax. Note that in contrast to a typical ETL procedure, read, transform and write operations do not need to be strictly separated.

Usage

GAMS Connect is available via the GAMS command line parameters ConnectIn and ConnectOut, via embedded code Connect, and as a standalone command line utility gamsconnect.

Instructions processed by the GAMS Connect interfaces need to be passed in YAML syntax as follows:

- <agent name1>:

<root option1>: <value>

<root option2>: <value>

.

.

<root option3>:

- <option1>: <value>

<option2>: <value>

.

.

- <option1>: <value>

<option2>: <value>

.

.

.

.

- <agent name2>:

.

.

.

.

The user lists the instructions to be performed. All individual agent instructions begin at the same indentation level starting with a - (a dash and a space) followed by the Connect agent name and a : (a colon). Connect agent options are represented in a simple <option>: <value> form. Please check the documentation of Connect Agents for available options. Options at the first indentation level are called root options and typically define general settings, e.g. the file name. While some agents only have root options, others have a more complex options structure, where a root option may be a list of dictionaries containing other options. A common example is the root option symbols (see e.g. GDXReader). Via symbols many agents allow to define symbol specific options, e.g. the name of the symbol. The option tables of agents with a more complex options structure provide a Scope to reflect this structure - options may be allowed at the first indentation level (root) and/or are assigned to other root options (e.g. symbols).

Checkout the GAMS Studio Connect Editor that allows creating and editing YAML instructions by a simple drag and drop of agents and corresponding options.

Note that YAML syntax also supports an abbreviated form for lists and dictionary, e.g. <root option3>: [ {<option1>: <value>, <option2>: <value>}, {<option1>: <value>, <option2>: <value>} ].

Here is an example that uses embedded Connect code to process instructions:

$onecho > distance.csv

i;j;distance in miles

seattle;new-york;2,5

seattle;chicago;1,7

seattle;topeka;1,8

san-diego;new-york;2,5

san-diego;chicago;1,8

san-diego;topeka;1,4

$offecho

$onecho > capacity.csv

i,capacity in cases

seattle,350.0

san-diego,600.0

$offecho

Set i 'Suppliers', j 'Markets';

Parameter d(i<,j<) 'Distance', a(i) 'Capacity';

display i, j, d, a;

In this example, we are reading two CSV files distance.csv and capacity.csv using the CSVReader. Then we directly write to symbols in GAMS using the GAMSWriter.

GAMS Studio Connect Editor

The GAMS Studio Connect Editor provides functionalities for creating and editing so-called GAMS Connect files, containing instructions in YAML syntax which will be processed by the GAMS Connect interfaces. With the Connect Editor, the user can create YAML instructions by a simple drag and drop of agents and corresponding options, instead of creating the YAML instructions for Connect manually. The content of the Connect file can also be displayed and edited in plain YAML format using a text editor. See documentation of the Connect Editor for more information.

Connect Agents Overview

Current Connect agents support the following data source formats: CSV, Excel, GDX and SQL. The following Connect agents are available:

| Connect agent | Description | Supported symbol types |

|---|---|---|

| Concatenate | Allows concatenating multiple symbols in the Connect database. | Sets and parameters |

| CSVReader | Allows reading a symbol from a specified CSV file into the Connect database. | Sets and parameters |

| CSVWriter | Allows writing a symbol in the Connect database to a specified CSV file. | Sets and parameters |

| DomainWriter | Allows rewriting the domain information of an existing Connect symbol. | Sets, parameters, variables, and equations |

| ExcelReader | Allows reading symbols from a specified Excel file into the Connect database. | Sets and parameters |

| ExcelWriter | Allows writing symbols in the Connect database to a specified Excel file. | Sets and parameters |

| Filter | Allows to reduce symbol data by applying filters on labels and numerical values. | Sets, parameters, variables, and equations |

| GAMSReader | Allows reading symbols from the GAMS database into the Connect database. | Sets, parameters, variables, and equations |

| GAMSWriter | Allows writing symbols in the Connect database to the GAMS database. | Sets, parameters, variables, and equations |

| GDXReader | Allows reading symbols from a specified GDX file into the Connect database. | Sets, parameters, variables, and equations |

| GDXWriter | Allows writing symbols in the Connect database to a specified GDX file. | Sets, parameters, variables, and equations |

| LabelManipulator | Allows to modify labels of symbols in the Connect database. | Sets, parameters, variables, and equations |

| Projection | Allows index reordering, projection onto a reduced index space, and expansion to an extended index space through index duplication of a GAMS symbol. | Sets, parameters, variables, and equations |

| PythonCode | Allows executing arbitrary Python code. | - |

| RawCSVReader | Allows reading unstructured data from a specified CSV file into the Connect database. | - |

| RawExcelReader | Allows reading unstructured data from a specified Excel file into the Connect database. | - |

| SQLReader | Allows reading symbols from a specified SQL database into the Connect database. | Sets and parameters |

| SQLWriter | Allows writing symbols in the Connect database to a specified SQL database. | Sets and parameters |

Getting Started Examples

We introduce the basic functionalities of GAMS Connect agents on some simple examples. For more examples see section Examples.

CSV

The following example (a modified version of the trnsport model) shows how to read and write CSV files. The full example is part of DataLib as model connect03. Here is a code snippet of the first lines:

$onEcho > distance.csv

i,new-york,chicago,topeka

seattle,2.5,1.7,1.8

san-diego,2.5,1.8,1.4

$offEcho

$onEcho > capacity.csv

i,capacity

seattle,350

san-diego,600

$offEcho

$onEcho > demand.csv

j,demand

new-york,325

chicago,300

topeka,275

$offEcho

Set i 'canning plants', j 'markets';

Parameter d(i<,j<) 'distance in thousands of miles'

a(i) 'capacity of plant i in cases'

b(j) 'demand at market j in cases';

[...]

It starts out with the declaration of sets and parameters. With compile-time embedded Connect code, data for the parameters is read from CSV files using the Connect agent CSVReader. The CSVReader agent, for example, reads the CSV file distance.csv and creates the parameter d in the Connect database. The name of the parameter must be given by the option name. Column number 1 is specified as the first domain set using option indexColumns. The valueColumns option is used to specify the column numbers 2, 3 and 4 containing the values. Per default, the first row of the columns specified via valueColumns will be used as the second domain set. The symbolic constant lastCol can be used if the number of index or value columns is unknown. As a last step, all symbols from the Connect database are written to the GAMS database using the Connect agent GAMSWriter. The GAMSWriter agent makes the parameters d, a and b available outside the embedded Connect code. Note that the sets i and j are defined implicitly through parameter d.

Finally, after solving the transport model, Connect can be used to export results to a CSV file:

[...]

Model transport / all /;

solve transport using lp minimizing z;

This time, we need to use execution-time embedded Connect code. The Connect agent GAMSReader imports variable x into the Connect database. With the Connect agent CSVWriter we write the variable level to the CSV file shipment_quantities.csv:

i_0,new-york,chicago,topeka seattle,50.0,300.0,0.0 san-diego,275.0,0.0,275.0

Setting the option unstack to True allows to use the last dimension as the header row.

Excel

The following example is part of GAMS Model Library as model cta and shows how to read and write Excel spreadsheets. Here is a code snippet of the first lines:

Set

i 'rows'

j 'columns'

k 'planes';

Parameter

dat(k<,i<,j<) 'unprotected data table'

pro(k,i,j) 'information sensitive cells';

* extract data from Excel workbook

[...]

It starts out with the declaration of sets and parameters. With compile-time embedded Connect code, data for the parameters is read from the Excel file cox3.xlsx using the Connect agent ExcelReader. The ExcelReader agent allows reading data for multiple symbols that are listed under the keyword symbols, here, parameter dat and pro. For each symbol, the symbol name is given by option name and the Excel range by option range. The option rowDimension defines that the first two columns of the data range will be used for the labels. In addition, the option columnDimension defines that the first row of the data range will be used for the labels. As a last step, all symbols from the Connect database are written to the GAMS database using the Connect agent GAMSWriter. The GAMSWriter agent makes the parameters dat and pro available outside the embedded Connect code. Note that the sets i, j and k are defined implicitly through parameter dat.

Finally, after solving the cox3c model with alternative solutions, Connect can be used to export results to Excel:

[...]

loop(l$((obj.l - best)/best <= 0.01),

ll(l) = yes;

binrep(s,l) = round(b.l(s));

binrep('','','Obj',l) = obj.l;

binrep('','','mSec',l) = cox3c.resUsd*1000;

binrep('','','nodes',l) = cox3c.nodUsd;

binrep('Comp','Cells','Adjusted',l) = sum((i,j,k)$(not s(i,j,k)), 1$round(adjn.l(i,j,k) + adjp.l(i,j,k)));

solve cox3c min obj using mip;

);

This time, we need to use execution-time embedded Connect code. The Connect agent GAMSReader imports the reporting parameter binrep into the Connect database. With the Connect agent ExcelWriter we write the parameter into the binrep sheet of the Excel file results.xlsx.

SQL

The following example (a modified version of the whouse model) shows how to read from and write to a SQL database (sqlite). The full example is part of DataLib as model connect04. Here is a code snippet of the first lines:

[...]

Set t 'time in quarters';

Parameter

price(t) 'selling price ($ per unit)'

istock(t) 'initial stock (units)';

Scalar

storecost 'storage cost ($ per quarter per unit)'

storecap 'stocking capacity of warehouse (units)';

[...]

It starts out with the declaration of sets and parameters. With compile-time embedded Connect code, data for all the symbols are read from the sqlite database whouse.db using the Connect agent SQLReader by passing the connection url through the option connection. The SQLReader agent, for example, queries the table priceTable for data and creates the parameter price in the Connect database. The SQLReader allows reading data for multiple symbols that are listed under the keyword symbols and are fetched through the same connection. For each symbol the name must be given by the option name. The SQL query statement is passed through the option query. The symbol type can be specified using the option type. By default, every symbol is treated as a GAMS parameter. As a last step, all symbols from the Connect database are written to the GAMS database using the Connect agent GAMSWriter. The GAMSWriter agent makes all read in symbols available outside the embedded Connect code.

Further, after solving the warehouse model, Connect can be used to export the results to tables in the SQL database.

[...]

Model swp 'simple warehouse problem' / all /;

solve swp minimizing cost using lp;

Here, we need to use execution-time embedded Connect code. The Connect agent GAMSReader imports all the variables into the Connect database. The SQLWriter agent then writes each symbol to respective tables in the SQL database whouse.db. For example the stock level:

|t_0 |level | |:-------|:---------| |q-1 |100.0 | |q-2 |0.0 | |q-3 |0.0 | |q-4 |0.0 |

The ifExists option allows to either append to an extending table or replace it with new data. By default, the value for ifExists is set to fails.

Connect Agents

This section provides a reference for the available Connect agents and their options. Each agent provides a table overview of available options that allows to identify the scope of an option as well as its default value.

Each option can be either optional or required:

- If an optional option is omitted, it will automatically receive a default value.

- Required options must be provided, but only if the parent option has been specified. A typical example is the

symbolsoption. By default,symbolsis set toallfor certain agents. However, ifsymbolsis specified as a list of individual symbols (instead ofall), then its child optionnamebecomes required.

- Note

- The option value

nullindicates that an option is missing. For optional options, it is allowed to setnullwhich will have the same effect as omitting the option. This means that even an explicitnullwill result in the default value for this option.

The table overview also allows to determine the scope of an option. In some cases, agents may have the same option available across different scopes, for example the root scope and the symbols scope:

- An omitted option in the

symbolsscope inherits its value from therootscope. Therefore, the technical default of the option in thesymbolsscope isnullwhich indicates that the option is omitted and inherits the value from therootscope. Note that the table overview does not show this technicalnulldefault. - If an option is specified in the

symbolsscope, it will not inherit the value from therootscope.

Concatenate

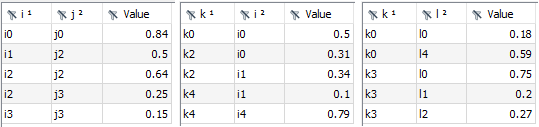

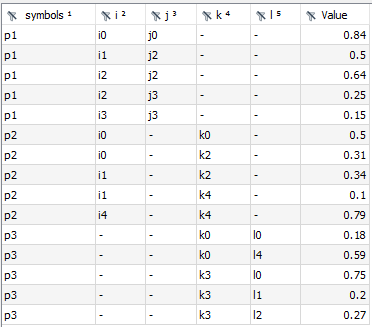

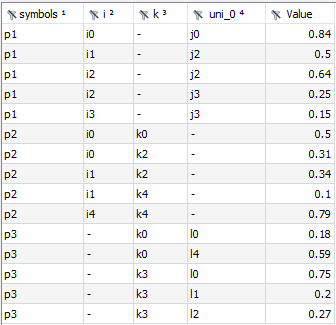

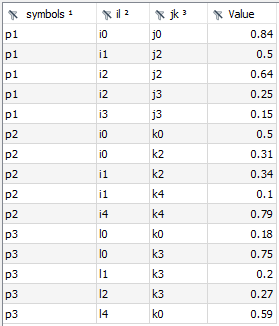

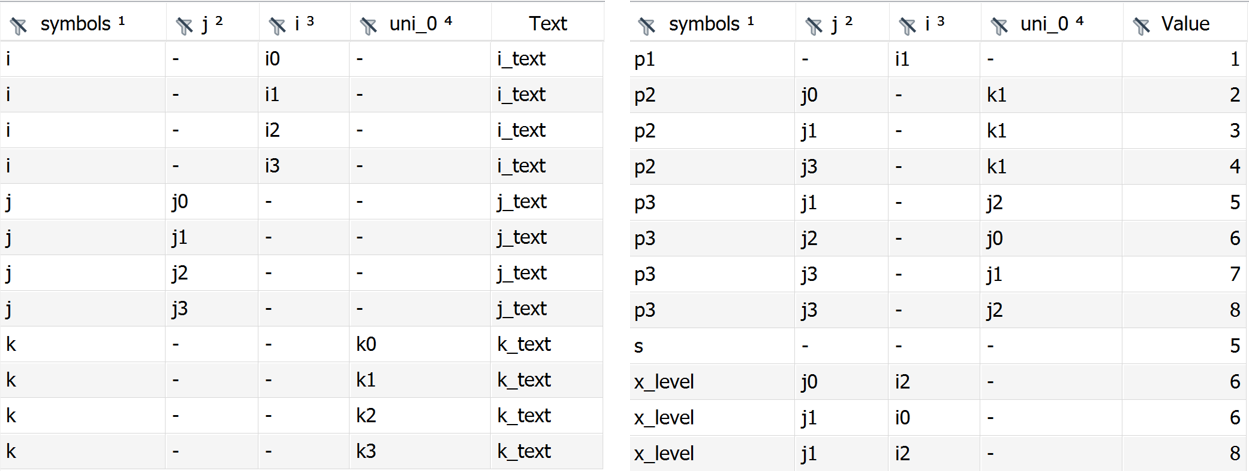

The Concatenate agent allows concatenating multiple symbols (sets or parameters) in the Connect database into a single symbol of the same type. It takes the union of domain sets of all concatenated symbols and uses that as the domain for the output symbol. There are several options to guide this domain finding process which are explained below. The general idea is best explained with an example. Consider three parameters p1(i,j), p2(k,i), and p3(k,l). The union of all domain sets is i, j, k, and l and, hence, the output symbol will be parameterOutput(symbols,i,j,k,l). The very first index of parameterOutput contains the name of the concatenated symbol followed by the domain sets. If a domain set is not used by a concatenated symbol the corresponding records in parameterOutput will feature the emptyUel, a - (dash) by default, as the following figures show:

The Concatenate agent is especially useful in combination with UI components that provide a pivot table, like GAMS MIRO, to represent many individual output symbols in a single powerful and configurable table format.

Obviously, there are more complex situations with respect to the domain of the resulting parameterOutput. For example, only a subset of domain sets are relevant and the remaining ones should be combined in as few index positions as possible. For this, assume only domain sets i and k from the above example are relevant and j and l can be combined in a single index position - a so-called universal domain. The resulting parameterOutput would look as follows:

Moreover, the Concatenate agent needs to deal with universe domain sets * and domain sets that are used multiple times in a concatenated symbol. In addition to the symbols index (always the first index position of the output symbol), by default the union of domain sets of the concatenated symbols determine the domain of the output symbol. If a domain set (including the universe *) appears multiple times in a concatenated symbol domain, these duplicates will be part of the output symbol domain. For example, q1(*,i,j,*) and q2(*,i,i) will result in the output symbol parameterOutput(symbols,*,i,j,*,i,) by default, mapping index positions 1 to 4 of q1 to positions 2 to 5 of parameterOutput and index positions 1 to 3 of q2 to 2, 3, and 6.

All the described situations can be configured with a few options of the agent. The option outputDimensions allows to control the domain of the output symbol. The default behavior (outputDimension: all) gets the domain sets from the concatenated symbols and builds the union with duplicates if required. Alternatively, outputDimensions can be a list of the relevant domain sets (including an empty list). In any case, the agent iterates through the concatenated symbols and maps the index positions of a concatenated symbol into the index positions of the output symbol using the domain set names. Names not present in outputDimensions will be added as universal domains. Per default, the domain set names of a concatenated symbol will be the original domain set names as stored in the Connect database. There are two ways to adjust the domain set names of concatenated symbols: dimensionMap and an explicitly given domain for a symbol in the name option. The dimensionMap which is given once and holds for all symbols allows to map original domain names of concatenated symbols to the desired domain names. The name option provides such a map by symbol and via the index position rather than the domain names of the concatenated symbol. In the above example with p1(i,j), p2(k,i), and p3(k,l), we could put indices i and l as well as j and k together resulting in the following output symbol:

This can be accomplished in two ways: either we use dimensionMap: {i: il, l: il, j: jk, k: jk} or we use name: p1(il,jk), name: p2(jk,il), and name: p3(jk,il) to explicitly define the domain names for each symbol. Note that it is not required to set outputDimensions: [il,jk] since per default the union of domain sets is built using the mapped domain names. In case a domain set is used more than once in a domain of a concatenated symbol the mapping goes from left to right to find the corresponding output domain. If this is not desired, the Projection agent can be used to reorder index positions in symbols or explicit index naming can be used. In the example with q1(*,i,j,*) and q2(*,i,i), the second index position of q2 will be put together with the second index position of q1. If one wants to map the second i of q2 (in the third index position) together with the i of q1 (in second index position), one can, e.g. do with name: q1(*,i,j,*), and name: q2(*,i2,i).

- Note

- The Concatenate agent creates result symbols

parameterOutputandsetOutputfor parameters and sets separately. Both have the same output domain. If different output domains forparameterOutputandsetOutputare desired, use two instantiations of the Concatenate agent. - Variables and equations need to be turned into parameters with the Projection agent before they can be concatenated.

- If option

nameis given without an explicit domain for the concatenated symbol, the domain names stored in the Connect container are used and mapped via thedimensionMapoption, if provided. - A domain set of a concatenated symbol that cannot be assigned to an index in

outputDimensionswill be mapped to a so-called universal domain. The Concatenate agent automatically adds as many universal domains as required to the output symbols.

- The Concatenate agent creates result symbols

Here is an example that uses the Concatenate agent:

Sets

i(i) / i0*i3 "i_text" /

j(j) / j0*j3 "j_text" /

k(k) / k0*k3 "k_text" /;

Parameters

p1(i) / i1 1 /

p2(k,j) / k1.j0 2, k1.j1 3, k1.j3 4 /

p3(j,j) / j1.j2 5, j2.j0 6, j3.j1 7, j3.j2 8 /

s / 5 /;

Positive Variable x(i,j);

x.l(i,j)$(uniform(0,1)>0.8) = uniformint(0,10);

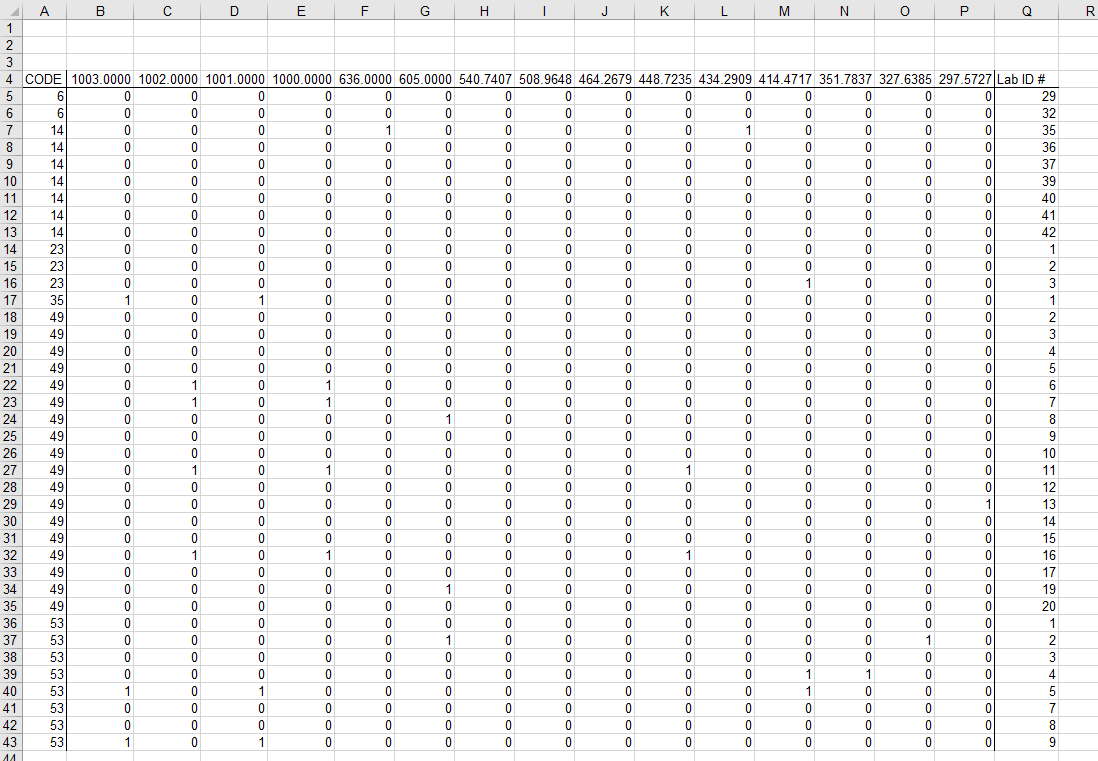

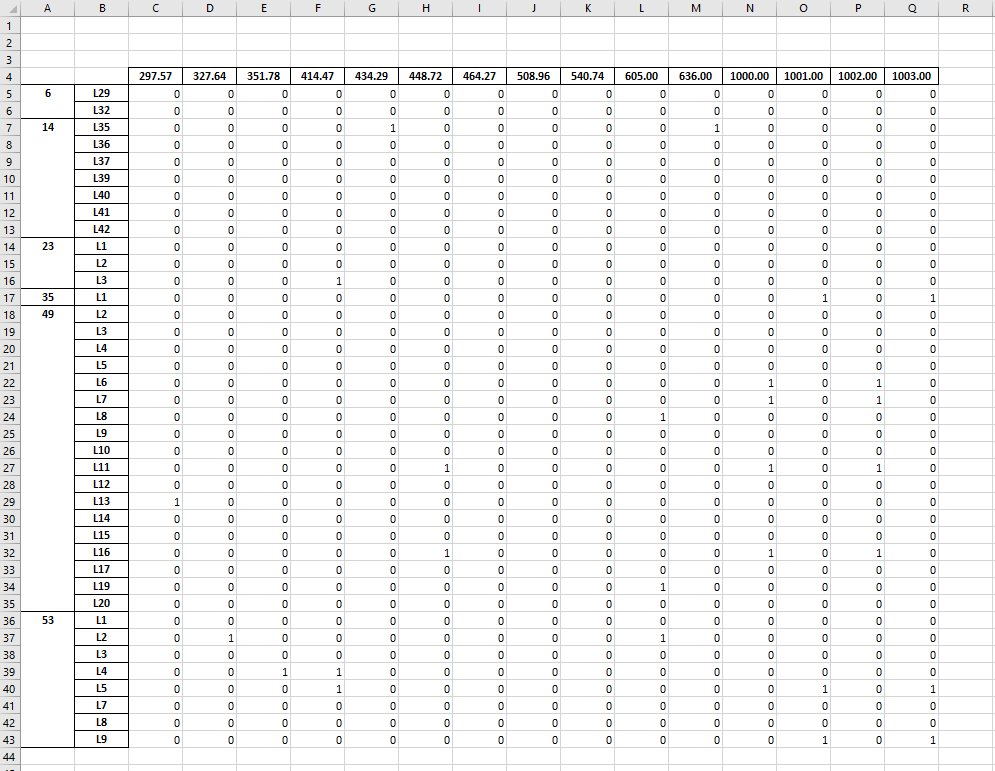

The resulting set and parameter outputs look as follows:

The following options are available for the Concatenate agent.

| Option | Scope | Default | Description |

|---|---|---|---|

| dimensionMap | root | null | Define a mapping for the domain names of concatenated symbols as stored in the Connect database to the desired domain names. |

| emptyUel | root | - | Define a character to use for empty uels. |

| name | symbols | Specify the name of the symbol with potentially index space. | |

| newName | symbols | null | Specify a new name for the symbol in the symbols dimension of the output symbol. |

| outputDimensions | root | all | Define the dimensions of the output symbols. |

| parameterName | root | parameterOutput | Name of the parameter output symbol. |

| setName | root | setOutput | Name of the set output symbol. |

| skip | root | null | Indicate if sets or parameters should be skipped. |

| symbols | root | all | Specify symbol specific options. |

| symbolsDimension | root | True | Specify if output symbols should have a symbols dimension. |

| trace | root | 0 | Specify the trace level for debugging output. |

| universalDimension | root | uni | Specify the base name of universal dimensions. |

Detailed description of the options:

dimensionMap: dictionary (default: null)

Define a mapping for domain names of concatenated symbols as stored in the Connect database to the desired domain names. For example,

dimensionMap: {i: ij, j: ij}will map both symbol domainsiandjtoij.

Define a character to use for empty uels.

Specify the name of the symbol with potentially index space. Requires the format

symName(i1,i2,...,iN). The index space may be specified to establish a mapping for the domain names of the symbol as stored in the Connect database to the desired domain names. If no index space is provided, the domain names stored in the Connect data are used and mapped via thedimensionMapoption if provided.

newName: string (default: null)

Specify a new name for the symbol in the

symbolsdimension of the output symbol.

outputDimensions: all, list of strings (default: all)

Define the dimensions of the output symbols explicitly using a list, e.g.

outputDimensions: [i,j]. The defaultallgets the domain sets from the concatenated symbols and builds the union with duplicates if required.

parameterName: string (default: parameterOutput)

Name of the parameter output symbol.

setName: string (default: setOutput)

Name of the set output symbol.

skip: set, par (default: null)

Indicate if sets or parameters should be skipped. Per default the agent takes both sets and parameters into account (if both are available via symbols) and generates a set and parameter output symbol with the same domain. If

setis specified, the sets will be skipped, i.e. sets are not taken into account for setting up the domain and no set output symbol will be generated. Ifparis specified, the parameters will be skipped.

symbols: all, list of symbols (default: all)

A list containing symbol specific options. Allows to concatenate a subset of symbols. The default

allconcatenates all sets and parameters in the given database.

symbolsDimension: boolean (default: True)

Specify if output symbols should have a

symbolsdimension that contains the input symbol names. IfTrue, the Concatenate agent adds asymbolsdimension at the first index position. IfFalse, nosymbolsdimension will be added.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

universalDimension: string (default: uni)

Specify the base name of universal dimensions.

CSVReader

The CSVReader allows reading a symbol (set or parameter) from a specified CSV file into the Connect database. Its implementation is based on the pandas.DataFrame class and its I/O API method read_csv. See getting started example for a simple example that uses the CSVReader.

| Option | Default | Description |

|---|---|---|

| autoColumn | null | Generate automatic column names. |

| autoRow | null | Generate automatic row labels. |

| decimalSeparator | . (period) | Specify a decimal separator. |

| fieldSeparator | , (comma) | Specify a field separator. |

| file | Specify a CSV file path. | |

| header | infer | Specify the header(s) used as the column names. |

| indexColumns | null | Specify columns to use as the row labels. |

| indexSubstitutions | null | Dictionary used for substitutions in the index columns. |

| name | Specify a symbol name for the Connect database. | |

| names | null | List of column names to use. |

| quoting | 0 | Control field quoting behavior. |

| readCSVArguments | null | Dictionary containing keyword arguments for the pandas.read_csv method. |

| skipRows | null | Specify the rows to skip or the number of rows to skip. |

| stack | infer | Stacks the column names to index. |

| thousandsSeparator | null | Specify a thousands separator. |

| trace | 0 | Specify the trace level for debugging output. |

| type | par | Control the symbol type. |

| valueColumns | null | Specify columns to get the values from. |

| valueSubstitutions | null | Dictionary used for substitutions in the value columns. |

Detailed description of the options:

autoColumn: string (default: null)

Generate automatic column names. The

autoColumnstring is used as the prefix for the column label numbers. This option overrides the use of aheaderornames. However, if there is a header row, one must skip the row by enablingheaderor usingskipRows.

autoRow: string (default: null)

Generate automatic row labels. The

autoRowstring is used as the prefix for the row label numbers. The generated unique elements will be used in the first index position shifting other elements to the right. UsingautoRowcan be helpful when there are no labels that can be used as unique elements but also to store entries that would be a duplicate entry without a unique row label.

decimalSeparator: string (default: .)

Specify a decimal separator, e.g.

.(period) or,(comma).

fieldSeparator: string (default: ,)

Specify a field separator, e.g.

,(comma),;(semicolon), or\t(tab).

Specify a CSV file path.

header: infer, boolean, list of integers (default: infer)

Specify the header(s) used as the column names. Default behavior is to infer the column names: if no names are passed the behavior is identical to

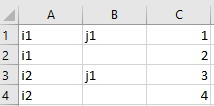

header=Trueand column names are inferred from the first line of data, if column names are passed explicitly then the behavior is identical toheader=False. Explicitly passheader=Trueto be able to replace existing names. Note that missing column names are filled withUnnamed: n(where n is the nth column (zero based) in theDataFrame). Hence, reading the CSV file:,j1, i1,1,2 i2,3,4 ,5,6results in the following 2-dimensional parameter:

j1 Unnamed: 2 i1 1.000 2.000 i2 3.000 4.000For a multi-row header, a list of integers can be passed providing the positions of the header rows in the data. Note that reading multi-row header is only supported for parameters. Moreover, the CSVReader can only read all columns and not a subset of columns, wherefore only

indexColumnscan be specified and all other columns will automatically be read asvalueColumns. Note thatindexColumnscan not be provided as column names together with a multi-row header.autoRowandautoColumnwill be ignored in case of a multi-row header. Here is an example how to read data with a multi-row header:$onecho > multirow_header.csv j,,j1,j1,j1,j2,j2,j2 k,,k1,k2,k3,k1,k2,k3 h,i,,,,,, h1,i1,1,2,,4,5,6 h1,i2,,,3,4,5, $offEchoThe same can be achieved if the data has no index column names:

j,,j1,j1,j1,j2,j2,j2 k,,k1,k2,k3,k1,k2,k3 h1,i1,1,2,,4,5,6 h1,i2,,,3,4,5,If the first line of data after the multi-row header has no data in the

valueColumns, the CSVReader will interpret this line as index column names.

indexColumns: integer, string, list of integers, list of strings (default: null)

Specify columns to use as the row labels. The columns can either be given as column positions or column names. Column positions can be represented as an integer, a list of integers or a string. For example:

indexColumns: 1,indexColumns: [1, 2, 3, 4, 6]orindexColumns: "1:4, 6". The symbolic constantlastColcan be used with the string representation:"2:lastCol". If noheaderornamesis provided,lastColwill be determined by the first line of data. Column names can be represented as a list of strings. For example:indexColumns: ["i1","i2"]. Note thatindexColumnsandvalueColumnsmust either be given as positions or names not both. Further note thatindexColumnsas column names are not supported together with a multi-row header.By default the

pandas.read_csvmethod interprets the following indices asNaN: "", "#N/A", "#N/A N/A", "#NA", "-1.#IND", "-1.#QNAN", "-NaN", "-nan", "1.#IND", "1.#QNAN", "<NA>", "N/A", "NA", "NULL", "NaN", "n/a", "nan", "null". The default can be changed by specifyingpandas.read_csvargumentskeep_default_naandna_valuevia readCSVArguments. Rows with indices that are interpreted asNaNwill be dropped automatically. The indexSubstitutions option allows to mapNaNentries in the index columns.

indexSubstitutions: dictionary (default: null)

Dictionary used for substitutions in the index columns. Each key in

indexSubstitutionsis replaced by its corresponding value. This option allows arbitrary replacements in the index columns of theDataFrameincluding stacked column names. Consider the following CSV file:i1,j1,2.5 i1,,1.7 i2,j1,1.8 i2,,1.4Reading this data into a 2-dimensional parameter results in a parameter with

NaNentries dropped:j1 i1 2.500 i2 1.800By specifying

indexSubstitutions: { .nan: j2 }we can substituteNaNentries byj2:j1 j2 i1 2.500 1.700 i2 1.800 1.400

Specify a symbol name for the Connect database. Note that each symbol in the Connect database must have a unique name.

names: list of strings (default: null)

List of column names to use. If the file contains a header row, then you should explicitly pass

header=Trueto override the column names. Duplicates in this list are not allowed.

quoting: 0, 1, 2, 3 (default: 0)

Control field quoting behavior. Use QUOTE_MINIMAL (

0), QUOTE_ALL (1), QUOTE_NONNUMERIC (2) or QUOTE_NONE (3). QUOTE_NONNUMERIC (2) instructs the reader to convert all non-quoted fields to type float. QUOTE_NONE (3) instructs reader to perform no special processing of quote characters.

readCSVArguments: dictionary (default: null)

Dictionary containing keyword arguments for the pandas.read_csv method. Not all arguments of that method are exposed through the YAML interface of the CSVReader agent. By specifying

readCSVArguments, it is possible to pass arguments directly to thepandas.read_csvmethod that is used by the CSVReader agent. For example,readCSVArguments: {keep_default_na: False, skip_blank_lines: False}.

skipRows: integer, list of integers (default: null)

Specify the rows to skip (list) or the number of rows to skip (integer). For example:

skipRows: [1, 3]orskipRows: 5.

stack: infer, boolean (default: infer)

If

True, stacks the column names to index. With the defaultinfer,stackwill be set toTrueif there is more than one value column, otherwiseFalse. Note that missing column names are filled withUnnamed: n(where n is the nth column (zero based) in theDataFrame).

thousandsSeparator: string (default: null)

Specify a thousands separator.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

Control the symbol type. Supported symbol types are

parfor parameters andsetfor sets.

valueColumns: integer, string, list of integers, list or strings (default: null)

Specify columns to get the values from. The value columns contain numerical values in case of a GAMS parameter and set element text in case of a GAMS set. Note that specifying

valueColumnsis required for parameters (except for parameter data with a multi-row header). The columns can be given as column positions or column names. Column positions can be represented as an integer, a list of integers or a string. For example:valueColumns: 1,valueColumns: [1, 2, 3, 4, 6]orvalueColumns: "1:4, 6". The symbolic constantlastColcan be used with the string representation:"2:lastCol". If noheaderornamesis provided,lastColwill be determined by the first line of data. Column names can be represented as a list of strings. For example:valueColumns: ["i1","i2"]. Note thatvalueColumnsandindexColumnsmust either be given as positions or names not both.By default the

pandas.read_csvmethod interprets the following values asNaN: "", "#N/A", "#N/A N/A", "#NA", "-1.#IND", "-1.#QNAN", "-NaN", "-nan", "1.#IND", "1.#QNAN", "<NA>", "N/A", "NA", "NULL", "NaN", "n/a", "nan", "null". The default can be changed by specifyingpandas.read_csvargumentskeep_default_naandna_valuevia readCSVArguments. Rows with values that are interpreted asNaNwill be dropped automatically. Changing the default of values that are interpreted asNaNis useful if, e.g. "NA" values should not be dropped but interpreted as GAMS special valueNA. Moreover, the valueSubstitutions option allows to mapNaNentries in the value columns.

valueSubstitutions: dictionary (default: null)

Dictionary used for substitutions in the value column. Each key in

valueSubstitutionsis replaced by its corresponding value. The replacement is only performed on the values which is the numerical value in case of a GAMS parameter and the set element text in case of a GAMS set. While it is possible to make arbitrary replacements this is especially useful for controlling sparse/dense reading. AllNaNentries are removed automatically by default which results in a sparse reading behavior. Consider the following CSV file:i1,j1, i1,j2,1.7 i2,j1, i2,j2,1.4Reading this data into a 2-dimensional parameter results in a sparse parameter with all

NaNentries removed:j2 i1 1.700 i2 1.400By specifying

valueSubstitutions: { .nan: EPS }we get a dense representation where allNaNentries are replaced by GAMS special valueEPS:j1 j2 i1 EPS 1.700 i2 EPS 1.400Beside

EPSthere are the following other GAMS special values that can be used by specifying their string representation:INF,-INF,EPS,NA, andUNDEF. See the GAMS Transfer documentation for more information.Let's assume we have data representing a GAMS set:

i1,text1 i2, i3,text3Reading this data into a 1-dimensional set results in a sparse set in which all

NaNentries (those that do not have any set element text) are removed:'i1' 'text 1', 'i3' 'text 3'By specifying

valueSubstitutions: { .nan: '' }we get a dense representation:'i1' 'text 1', 'i2', 'i3' 'text 3'It is also possible to use

valueSubstitutionsin order to interpret the set element text. Let's assume we have the following CSV file:,j1,j2,j3 i1,Y,Y,Y i2,Y,Y,N i3,0,Y,YReading this data into a 2-dimensional set results in a dense set:

'i1'.'j1' Y, 'i1'.'j2' Y, 'i1'.'j3' Y, 'i2'.'j1' Y, 'i2'.'j2' Y, 'i2'.'j3' N, 'i3'.'j1' 0, 'i3'.'j2' Y, 'i3'.'j3' YBy specifying

valueSubstitutions: { 'N': .nan, '0': .nan }we replace all occurrences ofNand0byNaNwhich gets dropped automatically:'i1'.'j1' Y, 'i1'.'j2' Y, 'i1'.'j3' Y, 'i2'.'j1' Y, 'i2'.'j2' Y, 'i3'.'j2' Y, 'i3'.'j3' Y

CSVWriter

The CSVWriter allows writing a symbol (set or parameter) in the Connect database to a specified CSV file. Variables and equations need to be turned into parameters with the Projection agent before they can be written. See getting started example for a simple example that uses the CSVWriter.

| Option | Default | Description |

|---|---|---|

| decimalSeparator | . (period) | Specify a decimal separator. |

| file | Specify a CSV file path. | |

| fieldSeparator | , (comma) | Specify a field separator. |

| header | True | Indicate if the header will be written. |

| name | Specify the name of the symbol in the Connect database. | |

| quoting | 0 | Control field quoting behavior. |

| setHeader | null | Specify a string that will be used as the header. |

| skipText | False | Indicate if the set element text will be skipped. |

| toCSVArguments | null | Dictionary containing keyword arguments for the pandas.to_csv method. |

| trace | 0 | Specify the trace level for debugging output. |

| unstack | False | Specify the dimensions to be unstacked to the header row(s). |

| valueSubstitutions | null | Dictionary used for mapping in the value column of the DataFrame. |

Detailed description of the options:

decimalSeparator: string (default: .)

Specify a decimal separator, e.g.

.(period) or,(comma).

- Note

- Changing the decimal separator (e.g. to

,) will have no effect if the value column contains non-numerical values. This can be the case if either numerical values are replaced with strings using valueSubstitutions or in case of special values which get translated into strings by default.

Specify a CSV file path.

fieldSeparator: string (default: ,)

Specify a field separator, e.g.

,(comma),;(semicolon), or\t(tab).

header: boolean (default: True)

Indicate if the header will be written.

Specify the name of the symbol in the Connect database.

quoting: 0, 1, 2, 3 (default: 0)

Control field quoting behavior. Use QUOTE_MINIMAL (

0), QUOTE_ALL (1), QUOTE_NONNUMERIC (2) or QUOTE_NONE (3). QUOTE_MINIMAL (0) instructs the writer to only quote those fields which contain special characters such asfieldSeparator. QUOTE_ALL (1) instructs the writer to quote all fields. QUOTE_NONNUMERIC (2) instructs the writer to quote all non-numeric fields. QUOTE_NONE (3) instructs the writer to never quote fields.

setHeader: string (default: null)

Specify a string that will be used as the header. If an empty header is desired, the string can be empty.

skipText: boolean (default: False)

Indicate if the set element text will be skipped. If

False, the set element text will be written in the last column of the CSV file.

toCSVArguments: dictionary (default: null)

Dictionary containing keyword arguments for the pandas.to_csv method. Not all arguments of that method are exposed through the YAML interface of the CSVWriter agent. By specifying

toCSVArguments, it is possible to pass arguments directly to thepandas.to_csvmethod that is used by the CSVWriter agent. For example,toCSVArguments: {index_label: ["index1", "index2", "index3"]}.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

unstack: boolean, list of integers (default: False)

Specify the dimensions to be unstacked to the header row(s). If

False(default) no dimension will be unstacked to the header row. IfTruethe last dimension will be unstacked to the header row. If multiple dimensions should be unstacked to header rows, a list of integers providing the dimension numbers to unstack can be specified.

valueSubstitutions: dictionary (default: null)

Dictionary used for mapping in the value column of the

DataFrame. Each key invalueSubstitutionsis replaced by its corresponding value. The replacement is only performed on the value column of theDataFramewhich is the numerical value in case of a GAMS parameter and the set element text in case of a GAMS set. Note that for parameters the CSVWriter automatically converts numerical GAMS special values to their string representation, i.e.INF,-INF,EPS,NA, andUNDEF. If the GAMS special values should be replaced by custom values, use the string representation (upper case) in the dictionary. For example, specify{'EPS': 0}to replace GAMS special valueEPSby zero. See the GAMS Transfer documentation for more information on GAMS special values.

DomainWriter

The DomainWriter agent allows to rewrite domain information for existing Connect symbols and helps dealing with domain violations.

Here is an example that uses the DomainWriter agent:

Set i / i1*i2 /, ii(i) / i1 /;

Parameter a(i) / i1 1, i2 2 /, b(i) / i1 1, i2 2 /;

In this example, the DomainWriter is used to modify the one-dimensional parameters a and b. For parameter a, a regular domain with domain set ii (a subset of set i) is established. Since parameter a still has the (i2 2.0) record, the parameter now contains a domain violation as i2 is not part of the new regular domain ii. While the Connect database in general can hold symbols with domain violations, this is not the case for GAMS or GDX. Since the symbols are later written to GDX, dropDomainViolations: after is specified instructing the DomainWriter to drop all domain violations after the new domain is applied. For parameter b, a relaxed domain 'ii' is established. This means that the universal domain * is established while using ii as the relaxed domain name. As the new domain is relaxed, no domain violations are introduced.

After the DomainWriter parameter a and b look as follows:

Set a:

ii value

0 i1 1.0

Set b:

ii value

0 i1 1.0

1 i2 2.0

| Option | Scope | Default | Description |

|---|---|---|---|

| dropDomainViolations | root/symbols | False | Indicate how to deal with domain violations. |

| name | symbols | Specify the name of the symbol in the Connect database. | |

| symbols | root | all | Specify symbol specific options. |

| trace | root | 0 | Specify the trace level for debugging output. |

Detailed description of the options:

dropDomainViolations: after, before, boolean (default: False)

The Connect symbols might have some domain violations. This agent allows to drop these domain violations so a write to GAMS or GDX works properly. Indicate how to deal with domain violations that can cause issues in GAMS or GDX. Per default domain violations will not be dropped. Setting

dropDomainViolationstoafterwill drop domain violations after a new domain is applied. Setting the option tobeforewill drop domain violations before a new domain is applied. Setting the option toTruewill drop domain violations before and after a new domain is applied. The DomainWriter agent does not necessarily need to be used to rewrite domain information for specific symbols but can also handle domain violations for all symbols in the Connect database. Withsymbols: allanddropDomainViolations: Truethe agent drops domain violations from all symbols in the Connect database. In this case,afterandbeforewill have the same effect asTrue.

Specify a symbol name with index space for the Connect database.

namerequires the formatsymName(i1,i2,...,iN). The list of indices needs to coincide with the names of the actual GAMS domain sets for a regular domain. A relaxed domain is set if the index is quoted. For examplename: x(i,'j')means that for the first index a regular domain with domain setiis established, while for the second index the universal domain*is used and a relaxed domain namejis set.

symbols: all, list of symbols (default: all)

A list containing symbol specific options or

allfor applying the DomainWriter to all symbols in the Connect database.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

ExcelReader

The ExcelReader agent allows to read symbols (sets and parameters) from an Excel file into the Connect database. See getting started example for a simple example that uses the ExcelReader.

- Note

- The ExcelReader supports

.xlsxand.xlsmfiles.

| Option | Scope | Default | Description |

|---|---|---|---|

| autoMerge | root/symbols | False | Indicate if empty cells in the labels should be merged with previous cells. |

| columnDimension | root/symbols | 1 | Column dimension of the symbol. |

| file | root | Specify an Excel file path. | |

| ignoreColumns | symbols | null | Columns to be ignored when reading. |

| ignoreRows | symbols | null | Rows to be ignored when reading. |

| ignoreText | root/symbols | infer | Indicate if the set element text should be ignored. |

| index | root | Specify the Excel range for reading symbols and options directly from the spreadsheet. | |

| indexSubstitutions | root/symbols | null | Dictionary used for substitutions in the row and column index. |

| mergedCells | root/symbols | False | Control the handling of empty cells in the labels and the values that are part of a merged Excel range. |

| name | symbols | Specify the name of the symbol in the Connect database. | |

| range | symbols | [name]!A1 | Specify the Excel range of a symbol. |

| rowDimension | root/symbols | 1 | Row dimension of the symbol. |

| skipEmpty | root/symbols | 1 | Number of empty rows or columns to skip before the next empty row or column indicates the end of the block for reading with open ranges. |

| symbols | root | Specify symbol specific options. | |

| trace | root | 0 | Specify the trace level for debugging output. |

| type | root/symbols | par | Control the symbol type. |

| valueSubstitutions | root/symbols | null | Dictionary used for mapping in the values. |

Detailed description of the options: autoMerge: boolean (default: False)

Indicate if empty cells in the labels should be merged with previous cells. If

True, each empty cell in the labels will be filled with the value of a previous cell that is not empty. This will resolve merged cells in the labels and will also fill empty cells in the labels that are not part of a merged cell. If the user wants to keep empty cells in the labels that are not part of a merged cell and still resolve merged cells,autoMergeneeds to be set toFalseand mergedCells needs to be set toTrue.

columnDimension: integer (default: 1)

The number of rows in the data range that will be used to define the labels for columns. The first

columnDimensionrows of the data range will be used for labels.

Specify an Excel file path.

ignoreColumns: integer, string, list of integers and strings (default: null)

Columns to be ignored when reading the file. They can be specified by any of the following methods:

- Single column: Use a single column number (e.g.

ignoreColumns: 3) or letter (e.g.ignoreColumns: C).- Ranges: Specify ranges in the format

start:end. Ranges can include:

- Integers (e.g.

ignoreColumns: "2:6")- Letters (e.g.

ignoreColumns: "B:F")- A mixture of both (e.g.

ignoreColumns: "B:6")- A list of columns: Include multiple column numbers, letters, and/or ranges (e.g.

ignoreColumns: [2, "D", "7:10"]).

ignoreRows: integer, string, list of integers and strings> (default: null)

Specify rows to be ignored when reading. You can specify:

- Single row: Use a single row number (e.g.

ignoreRows: 4).- Ranges: Specify ranges in the format

start:end. Ranges should only include integers (e.g.ignoreRows: "2:6").- A list of rows: Include multiple row numbers and/or ranges (e.g.

ignoreRows: [2, 5, "7:10"].)

ignoreText: infer, boolean (default: infer)

Indicate if the set element text should be ignored. With the default

inferthe set element text will be ignored based on the specification of range and columnDimension/rowDimension. If eithercolumnDimensionorrowDimensionis set to0and an open range is specified, the set element text will be ignored. If eithercolumnDimensionorrowDimensionis set to0and a full range is specified, the set element text will be ignored if it is not included in the range specification. In all other cases the set element text will not be ignored.

index: string (required: index or symbols) (excludes: symbols)

Specify the Excel range for reading symbols options directly from the spreadsheet similar to GDXXRWs index option. The general structure of an index sheet follows roughly the rules of

GDXXRW, but there are also options that are either not supported at all or work differently.

- Supported options:

rdim/rowDimensioncdim/columnDimensionse/skipEmptyignoreTextautoMergeignoreRowsignoreColumnsmergedCells- All supported options are processed case insensitive.

- All unsupported options are ignored.

- No support for

dSet.- No support for

dimbutrDim/rowDimensionandcDim/columnDimensiononly.- Missing values for

rDim/rowDimensionandcDim/columnDimensionwill not have theGDXXRWdefaults but will use the root-scoped values of rowDimension and columnDimension. When migrating fromGDXXRWto Connect it is best to explicitly specify those values in the index sheet instead of relying on default value.- Per default

GDXXRWdoes not store zeros, but the ExcelReader does. In order to change this, one has to specifyvalueSubstitutions: {0: .nan}in the root scope which will drop all records with value0.This option can not be specified together with symbols.

indexSubstitutions: dictionary (default: null)

Dictionary used for substitutions in the row and column index. Each key in

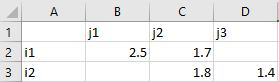

indexSubstitutionsis replaced by its corresponding value. This option allows arbitrary replacements in the index. Consider the following Excel spreadsheet: Two dimensional data containing NaN entries in the index

Two dimensional data containing NaN entries in the indexReading this data into a 2-dimensional parameter results in a parameter with

NaNentries dropped:j1 i1 1.000 i2 3.000By specifying

indexSubstitutions: { .nan: j2 }we can substituteNaNentries byj2:j1 j2 i1 1.000 2.000 i2 3.000 4.000

mergedCells: boolean (default: False)

Control the handling of empty cells that are part of a merged Excel range. The option applies to merged cells in the labels and the values, i.e. the numerical values in case of a GAMS parameter and the set element text in case of a GAMS set. If

False, the cells are left empty. IfTrue, the merged label/value is used in all cells. Note that setting this option toTruehas an impact on performance since the Excel file has to be opened in a non-read-only mode that results in non-constant memory consumption (no lazy loading). From the performance perspective, autoMerge should be preferred overmergedCellsif applicable.

Specify a symbol name for the Connect database. Note that each symbol in the Connect database must have a unique name.

range: string (default: [name]!A1)

Specify the Excel range of a symbol using the format

sheetName!cellRange.cellRangecan be either a single cell also known as an open range (north-west corner likeB2) or a full range (north-west and south-east corner likeB2:D4). Per default the ExcelReader uses the range[name]!A1, where[name]is the name of the symbol that is read. If onlysheetName!is specified, the ExcelReader will use an open range starting at cellA1. The ExcelReader also allows for named ranges - a named range includes a sheet name and a cell range. Before interpreting the providedrangeattribute, the string will be used to search for a pre-defined Excel range with that name.

rowDimension: integer (default: 1)

The number of columns in the data range that will be used to define the labels for the rows. The first

rowDimensioncolumns of the data range will be used for the labels.

skipEmpty: integer (default: 1)

Number of empty rows or columns to skip before the next empty row or column indicates the end of the block for reading with open ranges. If a full range is specified

skipEmptywill be ignored.

symbols: list of symbols (required: index or symbols) (excludes: index)

A list containing symbol specific options.

This option can not be specified together with index.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

Control the symbol type. Supported symbol types are

parfor parameters andsetfor sets.

valueSubstitutions: dictionary (default: null)

Dictionary used for substitutions in the value column. Each key in

valueSubstitutionsis replaced by its corresponding value. The replacement is only performed on the values which is the numerical value in case of a GAMS parameter and the set element text in case of a GAMS set. While it is possible to make arbitrary replacements, this is especially useful for controlling sparse/dense reading. AllNaNentries are removed automatically by default which results in a sparse reading behavior. Let's assume we have the following spreadsheet: Two dimensional data containing NaN entries

Two dimensional data containing NaN entriesReading this data into a 2-dimensional parameter results in a sparse parameter in which all

NaNentries are removed:'i1'.'j1' 2.5, 'i1'.'j2' 1.7, 'i2'.'j2' 1.8, 'i2'.'j3' 1.4By specifying

valueSubstitutions: { .nan: EPS }we get a dense representation in which allNaNentries are replaced by GAMS special valueEPS:'i1'.'j1' 2.5, 'i1'.'j2' 1.7, 'i1'.'j3' Eps, 'i2'.'j1' Eps, 'i2'.'j2' 1.8, 'i2'.'j3' 1.4Beside

EPSthere are the following other GAMS special values that can be used by specifying their string representation:INF,-INF,EPS,NA, andUNDEF. See the GAMS Transfer documentation for more information.Let's assume we have data representing a GAMS set:

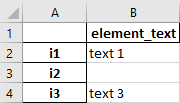

Data representing a GAMS set

Data representing a GAMS setReading this data into a 1-dimensional set results in a sparse set in which all

NaNentries (those that do not have any set element text) are removed:'i1' 'text 1', 'i3' 'text 3'By specifying

valueSubstitutions: { .nan: '' }we get a dense representation:'i1' 'text 1' 'i2' '' 'i3' 'text 3'It is also possible to use

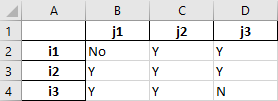

valueSubstitutionsin order to interpret the set element text. Let's assume we have the following Excel data: Data representing a GAMS set

Data representing a GAMS setReading this data into a 2-dimensional set results in a dense set:

'i1'.'j1' No, 'i1'.'j2' Y, 'i1'.'j3' Y, 'i2'.'j1' Y, 'i2'.'j2' Y, 'i2'.'j3' Y, 'i3'.'j1' Y, 'i3'.'j2' Y, 'i3'.'j3' NBy specifying

valueSubstitutions: { 'N': .nan, 'No': .nan }we replace all occurrences ofNandNobyNaNwhich gets dropped automatically. Note thatNohas to be quotes in order to not be interpreted asFalseby the YAML parser:'i1'.'j2' Y, 'i1'.'j3' Y, 'i2'.'j1' Y, 'i2'.'j2' Y, 'i2'.'j3' Y, 'i3'.'j1' Y, 'i3'.'j2' Y

ExcelWriter

The ExcelWriter agent allows to write symbols (sets and parameters) from the Connect database to an Excel file. Variables and equations need to be turned into parameters with the Projection agent before they can be written. If the Excel file exists, the ExcelWriter appends to the existing file. See getting started example for a simple example that uses the ExcelWriter.

- Note

- The ExcelWriter only supports

.xlsxfiles.

- Attention

- Please be aware of the following limitation when appending to an Excel file with formulas using the ExcelWriter: Whereas Excel stores formulas and the corresponding values, the ExcelReader and the ExcelWriter read/store either formulas or values, not both. As a consequence, when appending to an Excel file with formulas, all cells with formulas within the Excel file will not have values anymore and a subsequent read by the ExcelReader results into

NaNfor these cells. To avoid this, write to a separate output Excel file. On Windows one can merge the input Excel file with the output Excel file at the end using the tool win32.ExcelMerge (see Connect Example for Excel (executeTool win32.ExcelMerge)). An alternative approach when appending to an Excel file with formulas is to open and save the Excel file before reading it to let Excel evaluate formulas and restore the corresponding values.

| Option | Scope | Default | Description |

|---|---|---|---|

| clearSheet | root/symbols | False | Indicate if a sheet should be cleared before writing if it exists. |

| columnDimension | root/symbols | infer | Column dimension of the symbol. |

| emptySymbols | tableOfContents | False | Controls if empty symbols should be listed in the table of contents. |

| file | root | Specify an Excel file path. | |

| index | root | null | Specify the Excel range for reading symbols and options directly from the spreadsheet. |

| mergedCells | root/symbols | False | Write merged cells. |

| name | symbols | Specify the name of the symbol in the Connect database. | |

| range | symbols | [name]!A1 | Specify the Excel range of a symbol. |

| sheetName | tableOfContents | Table Of Contents | Specify the sheet name containing the table of contents. |

| sort | tableOfContents | False | Controls if symbol names in the table of contents are sorted alphabetically. |

| symbols | root | all | Specify symbol specific options. |

| tableOfContents | root | False | Controls the writing of a table of contents. |

| trace | root | 0 | Specify the trace level for debugging output. |

| valueSubstitutions | root/symbols | null | Dictionary used for mapping in the value column of the DataFrame. |

Detailed description of the options:

clearSheet: boolean (default: False)

Indicate if a sheet should be cleared before writing if it exists. The default is

Falsewhere a sheet will not be cleared if it exists, instead the ExcelWriter overwrites, i.e. writes content to the sheet without removing the existing content.

columnDimension: infer, integer (default: infer)

The last

columnDimensionindex positions of the symbol that will be written to the rows that define the labels of the columns. The firstdim-columnDimensionindex positions will be written to the columns that define the labels for the rows. With the defaultinfer,columnDimensionwill be set to1ifdim>0and otherwise to0.

emptySymbols: boolean (default: False)

If

Trueempty symbols will be contained in the table of contents. Since empty symbols will not be written to the Excel file, those entries will not contain a link.

Specify an Excel file path.

Specify the Excel range for reading symbols options directly from the spreadsheet similar to GDXXRWs index option. If specified, symbols is ignored. The general structure of an index sheet follows roughly the rules of

GDXXRW, but there are also options that are either not supported at all or work differently.

- Supported options:

cdim/columnDimensionmergedCellsclearSheet- All supported options are processed case insensitive.

- All unsupported options are ignored.

- No support for

dimbut andrDim/rowDimensionbutcDim/columnDimensiononly.- Missing value for

cDim/columnDimensionwill use the root-scoped value of columnDimension. When migrating fromGDXXRWto Connect it is best to explicitly specify this value in the index sheet instead of relying on the default value.

mergedCells: boolean (default: False)

Write merged cells. Please be aware that overwriting already existing merged cells in a sheet may cause problems, in that case consider setting clearSheet to

True.

Specify a symbol name for the Connect database.

range: string (default: [name]!A1)

Specify the Excel range of a symbol using the format

sheetName!cellRange.cellRangecan be either a single cell (north-west corner likeB2) or a full range (north-west and south-east corner likeB2:D4). Per default the ExcelWriter uses the range[name]!A1, where[name]is the name of the symbol that is written. If onlysheetName!is specified, the ExcelWriter will use an open range starting at cellA1. The ExcelWriter also allows for named ranges - a named range includes a sheet name and a cell range. Before interpreting the providedrangeattribute, the string will be used to search for a pre-defined Excel range with that name.

sheetName: string (default: Table Of Contents)

Specify the sheet name which will contain the table of contents.

sort: boolean (default: False)

If

Truethe table of contents is sorted by symbol name in alphabetical order. IfFalsethe order is determined from the order given in symbols or the order of symbols in the Connect database in casesymbols: all.

symbols: all, list of symbols (default: all)

A list containing symbol specific options. The default

allwrites each parameter and set into a separate sheet using the range[name]!A1. If specified, index takes precedence oversymbols.

tableOfContents: boolean, dictionary (default: False)

If

Truewrites a table of contents into sheetTable Of Contents. If a dictionary is provided, valid keys are sheetName, sort and emptySymbols. The sheet used for the table of contents is always cleared before writing.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

valueSubstitutions: dictionary (default: null)

Dictionary used for mapping in the value column of the

DataFrame. Each key invalueSubstitutionsis replaced by its corresponding value. The replacement is only performed on the value column of theDataFramewhich is the numerical value in case of a GAMS parameter and the set element text in case of a GAMS set. Note that for parameters the ExcelWriter automatically converts numerical GAMS special values to their string representation, i.e.INF,-INF,EPS,NA, andUNDEF. If the GAMS special values should be replaced by custom values, use the string representation (upper case) in the dictionary. For example, specify{'EPS': 0}to replace GAMS special valueEPSby zero. See the GAMS Transfer documentation for more information on GAMS special values.

Filter

The Filter agent allows to reduce symbol data by applying filters on labels and numerical values. Here is an example that uses the Filter agent:

Set i / seattle, san-diego /

j / new-york, chicago, topeka /;

Parameter d(i,j) /

seattle.new-york 2.5

seattle.chicago 1.7

seattle.topeka 1.8

san-diego.new-york 2.5

san-diego.chicago 1.8

san-diego.topeka 1.4

/;

The records of the parameter d are filtered and stored in a new parameter called d_new. Two label filters remove all labels except seattle from the first dimension and remove the label topeka from the second one. The remaining records are filtered by value where only values less than 2.5 are kept in the data. The resulting parameter d_new which is exported into report.gdx has only one record (seattle.chicago 1.7) left.

The following options are available for the Filter agent:

| Option | Scope | Default | Description |

|---|---|---|---|

| attribute | valueFilters | all | Specify the attribute to which a value filter is applied. |

| dimension | labelFilters | all | Specify the dimension to which a label filter is applied. |

| keep | labelFilters | Specify a list of labels to keep. | |

| labelFilters | root | null | Specify filters for index columns of a symbol. |

| name | root | Specify a symbol name for the Connect database. | |

| newName | root | null | Specify a new name for the symbol in the Connect database. |

| regex | labelFilters | Specify a regular expression to be used for filtering labels. | |

| reject | labelFilters | Specify a list of labels to reject. | |

| rejectSpecialValues | valueFilters | null | Specify the special values to reject. |

| rule | valueFilters | null | Specify a boolean expression to be used for filtering on numerical columns. |

| ruleIdentifier | valueFilters | x | The identifier used for the value filter rule. |

| trace | root | 0 | Specify the trace level for debugging output. |

| valueFilters | root | null | Specify filters for numerical columns of a symbol. |

Detailed description of the options:

attribute: all, value, level, marginal, upper, lower, scale (default: all)

Used to specify the attribute to which a value filter is applied. The following strings are allowed depending on the symbol type:

- Set: Not allowed

- Parameter:

all(default),value- Variable and equation:

all(default),level,marginal,upper,lower,scaleThe default

allwill apply the value filter on all value columns of the symbol.

dimension: all, integer (default: all)

Used to specify the dimension to which a label filter is applied. The default

allwill apply the label filter on all dimensions of the symbol.

keep: list of strings (required: keep or regex or reject) (excludes: regex, reject)

A list of labels to be kept when applying the label filter.

This option can not be specified together with regex or reject.

labelFilters: list of label filters (default: null)

A list containing label filters.

Specify the name of the symbol from the Connect database on whose data the filters will be applied.

newName: string (default: null)

Specify a new name for the symbol in the Connect database which will get the data after all filters have been applied. Each symbol in the Connect database must have a unique name.

regex: string (required: keep or regex or reject) (excludes: keep, reject)

A string containing a regular expression that needs to match in order to keep the corresponding label. Uses a full match paradigm which means that the whole label needs to match the specified regular expression.

This option can not be specified together with keep or reject.

reject: string (required: keep or regex or reject) (excludes: keep, regex)

A list of labels to be rejected when applying the label filter.

This option can not be specified together with keep or regex.

rejectSpecialValues: EPS, +INF, -INF, UNDEF, NA, list of special values (default: null)

Can be used to reject single special values, e.g.

rejectSpecialValues: EPS, or a list of special values, e.g.rejectSpecialValues: [EPS, UNDEF, -INF]. The following string representation of the GAMS special values must be used:INF,-INF,EPS,NAandUNDEF.

Used to specify a boolean expression for a value filter. Each numerical value of the specified dimension is tested and the corresponding record is only kept if the expression evaluates to true. The string needs to contain Python syntax that is valid for

pandas.Series. Comparison operators like>,>=,<,<=,==, or!=can be used in combination with boolean operators like&or|, but notandoror. Note that using&or|requires the operands to be enclosed in round brackets in order to form a valid expression. As an example, the expression((x<=10) & (x>=0)) | (x>20)would keep only those values that are between0and10(included) or greater than20.

ruleIdentifier: string (default: x)

Specifies the identifier that is used in the rule of a value filter.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

valueFilters: list of value filters (default: null)

A list containing value filters.

GAMSReader

The GAMSReader allows reading symbols from the GAMS database into the Connect database. Without GAMS context (e.g. when running the gamsconnect script from the command line) this agent is not available and its execution will result in an exception.

The GAMSReader allows to either read all symbols from the GAMS database:

- GAMSReader:

symbols: all

Or specific symbols only:

- GAMSReader:

symbols:

- name: i

- name: p

newName: p_new

| Option | Scope | Default | Description |

|---|---|---|---|

| name | symbols | Specify the name of the symbol in the GAMS database. | |

| newName | symbols | null | Specify a new name for the symbol in the Connect database. |

| symbols | root | all | Specify symbol specific options. |

| trace | root | 0 | Specify the trace level for debugging output. |

Detailed description of the options:

Specify the name of the symbol in the GAMS database.

newName: string (default: null)

Specify a new name for the symbol in the Connect database. Each symbol in the Connect database must have a unique name.

symbols: all, list of symbols (default: all)

A list containing symbol specific options. Allows to read a subset of symbols. The default

allreads all symbols from the GAMS database into the Connect database.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

GAMSWriter

The GAMSWriter allows writing symbols in the Connect database to the GAMS database. Without GAMS context (e.g. when running the gamsconnect script from the command line) and as part of the connectOut command line option this agent is not available and its execution will result in an exception.

The GAMSWriter allows to either write all symbols in the Connect database to the GAMS database:

- GAMSWriter:

symbols: all

Or specific symbols only:

- GAMSWriter:

symbols:

- name: i

- name: p

newName: p_new

| Option | Scope | Default | Description |

|---|---|---|---|

| domainCheckType | root/symbols | default | Specify if domain checking is applied or if records that would cause a domain violation are filtered. |

| duplicateRecords | root/symbols | all | Specify which record(s) to keep in case of duplicate records. |

| mergeType | root/symbols | default | Specify if data in a GAMS symbol is merged or replaced. |

| name | symbols | Specify the name of the symbol in the Connect database. | |

| newName | symbols | null | Specify a new name for the symbol in the GAMS database. |

| symbols | root | all | Specify symbol specific options. |

| trace | root | 0 | Specify the trace level for debugging output. |

Detailed description of the options:

domainCheckType: checked, default, filtered (default: default)

Specify if Domain Checking is applied (

checked) or if records that would cause a domain violation are filtered (filtered). If left atdefaultit depends on the setting of $on/offFiltered if GAMS does a filtered load or checks the domains during compile time. During execution timedefaultis the same asfiltered.

duplicateRecords: all, first, last, none (default: all)

A symbol in the Connect database can hold multiple records for the same indexes. Such duplicate records are only a problem when exchanging the data with GAMS (and GDX). The attribute determines which record(s) to keep in case of (case insensitive) duplicate records. With the default of

allthe GAMSWriter will fail in case duplicate records exist. Withfirstthe first record will be written to GAMS, withlastthe last record will be written to GAMS. Withnonenone of the duplicate records will be written to GAMS. Note that the agent deals with duplicate records in a case insensitive way.

mergeType: default, merge, replace (default: default)

Specify if data in a GAMS symbol is merged (

merge) or replaced (replace). If left atdefaultit depends on the setting of $on/offMulti[R] if GAMS does a merge, replace, or triggers an error during compile time. During execution timedefaultis the same asmerge.

Specify the name of the symbol in the Connect database.

newName: string (default: null)

Specify a new name for the symbol in the GAMS database. Note, each symbol in the GAMS database must have a unique name.

symbols: all, list of symbols (default: all)

A list containing symbol specific options. Allows to write a subset of symbols. The default

allwrites all symbols from the Connect database into to the GAMS database.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

GDXReader

The GDXReader allows reading symbols from a specified GDX file into the Connect database.

The GDXReader allows to either read all symbols from a GDX file:

- GDXReader:

file: in.gdx

symbols: all

Or specific symbols only:

- GDXReader:

file: in.gdx

symbols:

- name: i

- name: p

newName: p_new

| Option | Scope | Default | Description |

|---|---|---|---|

| file | root | Specify a GDX file path. | |

| name | symbols | Specify the name of the symbol in the GDX file. | |

| newName | symbols | null | Specify a new name for the symbol in the Connect database. |

| symbols | root | all | Specify symbol specific options. |

| trace | root | 0 | Specify the trace level for debugging output. |

Detailed description of the options:

Specify a GDX file path.

Specify the name of the symbol in the GDX file.

newName: string (default: null)

Specify a new name for the symbol in the Connect database. Each symbol in the Connect database must have a unique name.

symbols: all, list of symbols (default: all)

A list containing symbol specific options. Allows to read a subset of symbols. The default

allreads all symbols from the GDX file into the Connect database.

Specify the trace level for debugging output:

trace = 0: No trace output.trace > 0: Log instructions and Connect container status.trace > 1: Log additional scalar output.trace > 2: Log abbreviated intermediate DataFrames.trace > 3: Log entire intermediate DataFrames (potentially large output).

GDXWriter

The GDXWriter allows writing symbols in the Connect database to a specified GDX file.

The GDXWriter allows to either write all symbols in the Connect database to a GDX file:

- GDXWriter:

file: out.gdx

symbols: all

Or specific symbols only:

- GDXWriter:

file: out.gdx

symbols:

- name: i

- name: p

newName: p_new

| Option | Scope | Default | Description |

|---|---|---|---|

| duplicateRecords | root/symbols | all | Specify which record(s) to keep in case of duplicate records. |

| file | root | Specify a GDX file path. | |

| name | symbols | Specify the name of the symbol in the Connect database. | |

| newName | symbols | null | Specify a new name for the symbol in the GDX file. |

| symbols | root | all | Specify symbol specific options. |

| trace | root | 0 | Specify the trace level for debugging output. |

Detailed description of the options:

duplicateRecords: all, first, last, none (default: all)

A symbol in the Connect database can hold multiple records for the same indexes. Such duplicate records are only a problem when exchanging the data with GDX (and GAMS). The attribute determines which record(s) to keep in case of (case insensitive) duplicate records. With the default of

allthe GDXWriter will fail in case duplicate records exist. Withfirstthe first record will be written to GDX, withlastthe last record will be written to GDX. Withnonenone of the duplicate records will be written to GDX. Note that the agent deals with duplicate records in a case insensitive way.

Specify a GDX file path.

Specify the name of the symbol in the Connect database.

newName: string (default: null)

Specify a new name for the symbol in the GDX file. Note, each symbol in the GDX file must have a unique name.

symbols: all, list of symbols (default: all)

A list containing symbol specific options. Allows to write a subset of symbols. The default

allwrites all symbols from the Connect database into to the GDX file.

Specify the trace level for debugging output. For

trace > 1some scalar debugging output will be written to the log. Fortrace > 2the intermediate data frames will be written abbreviated to the log. Fortrace > 3the intermediate data frames will be written entirely to the log (potentially large output).

LabelManipulator

The LabelManipulator agent allows to modify labels of symbols in the Connect database. Four different modes are available: